Explaining My Paper Arguing For The Self-Indication Assumption

Alternatives are doomed!

So as some of you may have heard, I recently got a paper published in the journal Synthese. I know, I know, I’m exactly that cool! Sorry ladies (all roughly 2 of you who read the blog), I’m taken.

In the paper, I argue for the self-indication assumption (SIA) in anthropics. That’s the view that your existence gives you evidence that there are more people. For example, if a coin were flipped that would create 10 people if it came up heads and only one if it came up tails, and you were created as a result of the flip, you should think it’s 10 times as likely that it came up heads as that it came up tails.

The paper is about anthropic reasoning—how to do probabilistic reasoning about your existence. So it’s basically about probability, which is basically statistics, so now I plan to begin every future sentence with “statistician here.”

Statistician here: I thought that you might want to hear a summary of the points I make in the paper (66% probability). And if the argument is right and the universe is filled with uncountably many people, then it’s quite likely that infinitely many people have said nice things about the paper, including that it’s beautiful, wonderful, powerful, profound, that it’s fixed their marriage, and that it’s the only thing stopping a nuclear-armed Iran.1

In the paper, I argue for a very modest thesis: that every alternative to the self-indication assumption is crazy and that the main objection to the self-indication assumption is bunk!

My argument for the self-indication assumption is simple: every view other than the self-indication assumption implies crazy things. To see this, imagine that Adam is in the Garden of Eden but doesn’t know that he’s the first guy. He knows, however, that the first guy either has one offspring, in which case there will be 1 trillion people in total as the offspring have offspring of their own, or has no offspring, in which case it’s just him.

Let’s say Adam thinks there’s around a 50% chance the first guy would have kids. Because Adam gets his news from snakes, he doesn’t accept the self-indication assumption; perhaps snakes like the SSSSSSSSSSSSSSSSSSSSSSSSSSSSA (or some other view of anthropics: the argument works no matter what non-SIA view a person has, whether or not it’s the self-sampling assumption). As a result, he doesn’t think his existence is more likely if there are more people. So Adam reasons: “That the first guy has kids is just as likely as that the first guy has no kids. However, if the first guy doesn’t have any kids, then there’s only one guy in total, so it’s guaranteed I’d be the first person. In contrast, if the first guy has kids and there are a trillion people, I’m equally likely to be any of the trillion people, so the odds I’d be the first guy are 1 in a trillion.”

Suddenly, he hears a booming voice from the heavens declaring “you are Adam, the first human.” Because the odds he’d be the first guy are 1 in a trillion if the first guy has kids but 100% if the first guy has no kids, he now gets a trillion-to-one update in favor of the first guy not having any kids. But wait: he knows he’s the first guy. So now he thinks it’s a trillion times likelier that he’ll have no kids than that he’ll have kids.
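For readers who like to see the numbers, here’s a minimal Python sketch of Adam’s non-SIA update. It assumes exactly the setup above: a 50% prior on the first guy having kids, a likelihood of 1 of being first if he’s alone, and 1 in a trillion if there are a trillion people. The name `posterior_kids` is just an illustrative label, not anything from the paper.

```python
from fractions import Fraction

def posterior_kids(prior_kids, population_if_kids):
    """Non-SIA credence that the first guy has kids, after learning 'I am the first guy'.

    A non-SIA view does not reweight the prior by population size, so only the
    likelihood of being first matters: 1/population if there are kids, 1 if not.
    """
    prior_no_kids = 1 - prior_kids
    like_kids = Fraction(1, population_if_kids)  # chance of being first among N people
    like_no_kids = Fraction(1)                   # alone, so certainly first
    joint_kids = prior_kids * like_kids
    joint_no_kids = prior_no_kids * like_no_kids
    return joint_kids / (joint_kids + joint_no_kids)

TRILLION = 10**12
p = posterior_kids(Fraction(1, 2), TRILLION)
print(p)            # 1/1000000000001
print((1 - p) / p)  # odds of no kids vs kids: 1000000000000
```

The exact fractions make the trillion-to-one odds show up directly rather than as a rounded float.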

But now things get crazy. He now has maximally safe birth control: the odds of Eve getting pregnant are roughly 1 in a trillion. In fact, if he agreed to reliably procreate unless a deer dropped dead at his feet, he could be super confident that a deer would drop dead at his feet. If he’d have tons of kids unless he got a royal flush in poker, he could be confident he’d get a royal flush in poker. But this is crazy.

So alternatives to the self-indication assumption must, on pain of violating Bayesian conditionalization, hold that if the first human learned that he was the first human, he could be unbelievably confident that he wouldn’t have kids. It gets even worse: these views imply that if you didn’t know when you were born and then learned you’re one of the first 110 billion people, you could be quite confident the future wouldn’t last much longer. If the future would have a googol more people unless someone gets 4 consecutive royal flushes in poker, you could be super confident that they’d get 4 back-to-back royal flushes, because if there are a googol people, it’s very unlikely that you’d be one of the first 110 billion.

Note how elegantly the self-indication assumption cancels this out. It agrees that upon finding you’re one of the first N people, you get X-to-1 evidence that there are N people rather than XN people, but it says you should start out thinking the odds there are XN people are X times higher than the odds there are N people, so the two factors precisely cancel. As a result, SIA agrees with the intuitive verdict: no matter how many people the future will have unless you get a royal flush, the odds of getting a royal flush are still 1 in 649,740.
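Here’s a rough sketch of that cancellation in Python, using exact fractions. The setup is a hypothetical one for illustration: a royal flush (4 of the C(52, 5) possible five-card hands) means N people exist in total, no royal flush means XN people exist, and you then learn you’re among the first N. The function and its parameters (`n_early` for N, `multiplier` for X) are my own labels.

```python
from fractions import Fraction
from math import comb

# Probability of a royal flush in a five-card hand: 4 royal flushes out of C(52, 5) hands.
P_ROYAL = Fraction(4, comb(52, 5))  # = 1/649740

def credence_royal_flush(n_early, multiplier, sia):
    """Credence in a royal flush after learning you are among the first n_early people.

    Hypothetical setup: with a royal flush the world has n_early people in total;
    without one it has multiplier * n_early people.
    """
    pop_flush = n_early
    pop_no_flush = multiplier * n_early
    # Priors: SIA reweights each hypothesis by how many people it contains.
    w_flush = P_ROYAL * (pop_flush if sia else 1)
    w_no_flush = (1 - P_ROYAL) * (pop_no_flush if sia else 1)
    # Likelihood of being among the first n_early people, as a random member.
    like_flush = Fraction(n_early, pop_flush)        # = 1
    like_no_flush = Fraction(n_early, pop_no_flush)  # = 1/multiplier
    num = w_flush * like_flush
    return num / (num + w_no_flush * like_no_flush)

N, X = 110 * 10**9, 10**90
print(credence_royal_flush(N, X, sia=True))          # 1/649740
print(float(credence_royal_flush(N, X, sia=False)))  # 1.0 (gap from 1 is below float precision)
```

Under SIA the population weight X and the 1/X likelihood multiply out exactly, so the answer is the objective probability whatever X is.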

For this reason, only SIA is consistent with the idea that if some event has an objective probability of R%, and the only differences between its happening and its not happening concern what will happen in the future, your credence in it happening should be R%. The objective probability of an event is the percentage of the time that event will happen if the trial is repeated: for example, the objective probability of a coin coming up heads is 50%, because if you keep flipping coins, the proportion of heads will get arbitrarily close to one half.
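As a quick illustration of the long-run-frequency idea, this small Python simulation flips a simulated fair coin and reports the share of heads. The seeded `random.Random` generator and the sample sizes are arbitrary choices made for reproducibility.

```python
import random

def heads_frequency(num_flips, seed=0):
    """Share of heads in num_flips simulated fair coin flips (seeded for reproducibility)."""
    rng = random.Random(seed)
    heads = sum(rng.random() < 0.5 for _ in range(num_flips))
    return heads / num_flips

for n in (10, 1_000, 100_000):
    print(n, heads_frequency(n))
```

Small runs can wander well away from 0.5, but with more flips the share should settle ever closer to it, which is what an objective probability of 50% amounts to here.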

This idea is pretty intuitive. If I flip a coin, and a city will be destroyed if it comes up heads, I should still only think there’s a 50% chance that it will come up heads. The odds I should give to a coinflip don’t depend on what will happen in the future because of the coin flip turning out a certain way. But if this is true, then the self-indication assumption is vindicated: only it can hold, consistent with Bayesian updating, that the odds of getting a royal flush in poker don’t depend on how many kids you’ll have if you get a royal flush in poker.

Okay, so far we’ve established that views other than the self-indication assumption imply the correctness of the serpent’s reasoning in the following case, called Serpent’s Advice:

Eve and Adam, the first two humans, knew that if they gratified their flesh, Eve might bear a child, and if she did, they would be expelled from Eden and would go on to spawn billions of progeny that would cover the Earth with misery. One day a serpent approached the couple and spoke thus: “Pssst! If you embrace each other, then either Eve will have a child or she won’t. If she has a child then you will have been among the first two out of billions of people. Your conditional probability of having such early positions in the human species given this hypothesis is extremely small. If, on the other hand, Eve doesn’t become pregnant then the conditional probability, given this, of you being among the first two humans is equal to one. By Bayes’s theorem, the risk that she will have a child is less than one in a billion. Go forth, indulge, and worry not about the consequences!”

It also implies the correctness of the following reasoning in what’s called the Lazy Adam case:

Assume as before that Adam and Eve were once the only people and that they know for certain that if they have a child they will be driven out of Eden and will have billions of descendants. But this time they have a foolproof way of generating a child, perhaps using advanced in vitro fertilization. Adam is tired of getting up every morning to go hunting. Together with Eve, he devises the following scheme: They form the firm intention that unless a wounded deer limps by their cave, they will have a child. Adam can then put his feet up and rationally expect with near certainty that a wounded deer– an easy target for his spear– will soon stroll by.

In both cases, Adam can be confident he’ll get what he wants simply because if he doesn’t, he’ll have a lot of kids. Clearly, this is nuts. It’s both counterintuitive and contradicts the principle that I described above, that your credence in some event should equal its objective probability if the only differences between the ways it turns out will be in terms of what will happen in the future (e.g. you shouldn’t think a coin will probably come up heads because if it comes up tails a city will be destroyed).

You’d think this would be enough to level views other than SIA. But Nick Bostrom doesn’t think so—he argues the Lazy Adam case isn’t so bad because “it is in the nature of probabilistic reasoning that some people using it, if they are in unusual circumstances, will be misled.” But the problem isn’t just that they’d be misled—it’s that, on such a picture, they should have utterly crazy credences.

Imagine if a person thought that if you were on a mountain with Genghis Khan in the year 1400, you should expect a coin that’s flipped to come up heads with 99% certainty because it would be nice if it came up heads. Even though the situation is unusual, that would still be a crazy thing to think! Similarly, it’s crazy to think that you should be supremely confident that you’ll get a royal flush in poker because if you don’t, you’ll have a lot of kids (and it also conflicts with the principle of probability that I described before).

When reading Bostrom say this, I was flabbergasted. He just seemed to not get what was nutty about the result in the Lazy Adam case. It would be like if you found out your view commits you to thinking that everyone who feels deep in their heart that they’ll get a royal flush should think they’ll get a royal flush, and your response was “well, even people following the right theory of probability will be wrong sometimes!”

Okay, so now that we’ve established that there’s a knockdown argument for the self-indication assumption, I argue that this argument shows that thirding is correct in Sleeping Beauty. For those who don’t know, the Sleeping Beauty problem is the following:

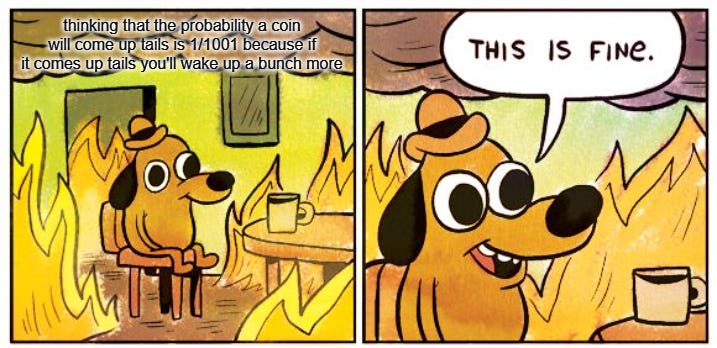

The Sleeping Beauty problem: Some researchers are going to put you to sleep. During the two days that your sleep will last, they will briefly wake you up either once or twice, depending on the toss of a fair coin (Heads: once; Tails: twice). After each waking, they will put you back to sleep with a drug that makes you forget that waking. When you are first awakened, to what degree ought you believe that the outcome of the coin toss is Heads?

There are two main answers: a half and a third. Thirders think that because you wake up twice if the coin comes up tails, upon waking up, you should think tails is twice as likely as heads. Thirders are right—let’s see why!

Let’s modify the numbers: imagine that if the coin comes up tails, the person wakes up 1,000 times. Halfers would still think that, upon waking up, one should regard heads and tails as equally likely. However, if the coin came up tails, the odds that it’s currently the first day are 1/1,000, while they’re 100% if the coin came up heads.

Suppose then that you wake up. You’re not sure whether the coin came up heads or tails; by halfer logic, you think they’re equally likely. Suddenly you hear a booming voice declare “it’s day 1.”

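Now you get a 1,000:1 update in favor of the coin coming up heads. And since the coin can just as well be tossed after the first waking (its result only determines whether there are further wakings), you are now confident, just by finding out that it’s the first day, that a coin that hasn’t been flipped yet will come up heads. Thirders rightly think this is nuts!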
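The numbers in this variant can be checked with a short Python sketch. It assumes the setup above (one waking on heads, 1,000 on tails, each tails waking equally likely to be the current one) and models the halfer as keeping 1/2 upon waking and the thirder as weighting each coin outcome by its number of wakings. `credence_heads_on_day1` is just an illustrative name.

```python
from fractions import Fraction

def credence_heads_on_day1(wakings_if_tails, thirder):
    """Credence in Heads after waking and then being told 'it's day 1'.

    Heads gives one waking; Tails gives wakings_if_tails wakings.
    """
    half = Fraction(1, 2)
    # Credence in Heads upon waking, before the announcement.
    if thirder:
        prior_heads = half / (half + half * wakings_if_tails)  # weight by number of wakings
    else:
        prior_heads = half                                     # halfer keeps the coin's 1/2
    prior_tails = 1 - prior_heads
    # Chance that the current waking is day 1: certain under Heads, 1/n under Tails.
    like_heads = Fraction(1)
    like_tails = Fraction(1, wakings_if_tails)
    num = prior_heads * like_heads
    return num / (num + prior_tails * like_tails)

print(credence_heads_on_day1(1000, thirder=False))  # 1000/1001
print(credence_heads_on_day1(1000, thirder=True))   # 1/2
```

The halfer ends up almost sure of heads for a coin that may not have been tossed yet, while the thirder’s 1/1001 starting credence is exactly offset by the 1,000:1 update, landing back on 1/2.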

So, halfing implies crazy presumptuousness and is doomed, QED, bing-bang-boom, etc.

Next, I argue that the main objection to the self-indication assumption is FAILED—SAD! This objection is called the presumptuous philosopher, nicely summarized by Bostrom:

It is the year 2100 and physicists have narrowed down the search for a theory of everything to only two remaining plausible candidate theories, T1 and T2 (using considerations from super-duper symmetry). According to T1 the world is very, very big but finite and there are a total of a trillion trillion observers in the cosmos. According to T2, the world is very, very, very big but finite and there are a trillion trillion trillion observers. The super-duper symmetry considerations are indifferent between these two theories. Physicists are preparing a simple experiment that will falsify one of the theories. Enter the presumptuous philosopher: “Hey guys, it is completely unnecessary for you to do the experiment, because I can already show to you that T2 is about a trillion times more likely to be true than T1!”
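The philosopher’s arithmetic fits in a few lines of Python. This sketch assumes equal priors for T1 and T2 (the symmetry considerations are indifferent) and models SIA as weighting each theory’s prior by its number of observers; `sia_posterior` is a hypothetical helper name.

```python
from fractions import Fraction

T1_OBSERVERS = 10**24  # "a trillion trillion"
T2_OBSERVERS = 10**36  # "a trillion trillion trillion"

def sia_posterior(priors, observers):
    """SIA: weight each theory's prior by the number of observers it contains, then normalize."""
    weights = {t: priors[t] * observers[t] for t in priors}
    total = sum(weights.values())
    return {t: w / total for t, w in weights.items()}

post = sia_posterior({"T1": Fraction(1, 2), "T2": Fraction(1, 2)},
                     {"T1": T1_OBSERVERS, "T2": T2_OBSERVERS})
print(post["T2"] / post["T1"])  # 1000000000000
```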

On SIA, the reasoning is correct: you should be almost certain that there are a trillion trillion trillion observers, because that makes your existence a trillion times likelier. This seems crazy to a lot of people. Worse, it seems to undercut the thesis of my paper: the argument for SIA can’t be that it avoids crazy presumptions in favor of improbable events if it implies crazy presumptions in favor of improbable events. If it’s a problem that views other than SIA give you reason to think the future won’t have lots of people, shouldn’t it be a problem that SIA gives you reason to think the world does have lots of people?

I don’t think this last concern is well-founded, though. We often think improbable things have happened because they explain things we observe. The prior probability of Caesar crossing the Rubicon is low, but we should think it happened, because it explains our evidence. Similarly, more people existing makes your existence more likely, so it’s not weird to believe that more people exist. What is weird is to think that improbable things will happen in the future based on what will happen in the future if they happen. We can’t have this kind of evidence for improbable future things, because we haven’t yet observed the future evidence.

Thus, SIA implies you should think improbable things (akin to Caesar crossing the Rubicon) have happened. That’s much less problematic than thinking improbable things will happen when nothing that has happened so far is made likelier by their happening, because the only observable differences between their happening and their not happening lie in the future. In addition, as I argue below, I don’t think the presumptuous philosopher result is very problematic, while the Lazy Adam result is crazy.

Why isn’t the presumptuous philosopher result crazy? I give four main reasons in the paper.

First of all, as Joe Carlsmith has pointed out, no one should actually reason the way the presumptuous philosopher does: the philosopher’s reasoning assumes certainty in the self-indication assumption. But no one should be certain in the self-indication assumption. All that SIA implies is that an ideal reasoner would reason like the presumptuous philosopher, and that doesn’t seem so weird.

Second, I think that when one thinks about the situation in greater detail, it stops seeming unintuitive. Just as the odds that any particular raspberry gets created are 1,000 times greater if 1,000 times as many raspberries get created, the odds that any particular person exists are 1,000 times greater if 1,000 times as many people exist. But that means the odds that you would exist are 1,000 times greater if 1,000 times as many people exist. Thinking about it this way, the presumptuous philosopher result doesn’t seem weird at all! So I don’t think the objection is very convincing: it assumes you’re not thinking about the case the way someone who follows SIA would.

Third, every view is presumptuous. In the paper, I give a case called the presumptuous archeologist result:

Presumptuous Archeologist: archeologists discover that there was a type of prehistoric humans that was very numerous—numbering in the quadrillions. Over time, they uncovered strong empirical evidence that these beings were exactly like modern humans—certainly similar enough to be part of the same reference class. The archeologists give a talk about their findings. At the end of the talk a philosopher gets up and declares “your data must be wrong, for if there were that many prehistoric humans then it would be unlikely we’d be so late (for most people in your reference class have already existed). Thus, they can’t be in our reference class and therefore the data must be wrong.”

This reasoning follows from the self-sampling assumption (SSA), the main rival to SIA, which says you should reason as if you’re randomly drawn from the collection of people in your reference class, where your reference class is the collection of beings enough like you for it to be true, in some sense, that you could have been one of them. In fact, as I explain in the paper, every view other than SIA implies similar presumptuousness, not just SSA:

However, I think this problem will apply to every view that updates on one’s existence and rejects SIA. If a universe with more prehistoric humans is no more likely to contain me, but a world with more prehistoric humans makes it less likely that I’d exist so late, then one has strong reason to reject the existence of many prehistoric humans. This can be seen once again in the same way: if I didn’t know when I existed, and the existence of more people like me doesn’t make my existence more likely, then I would initially assign equal probability to the hypothesis that there are many prehistoric humans like me and that there are few. Upon finding out that I’m not a prehistoric human, however, I’d get extremely strong evidence that there are few prehistoric humans. Thus, just as SIA proponents must disagree with the cosmologists, so too must those who reject SIA disagree with the archeologists!
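To put rough numbers on this, here’s a Python sketch. The figures for later humans (110 billion) and for the “few” hypothesis (a million prehistoric humans) are illustrative assumptions, not from the paper. Non-SIA views are modeled as treating you as a random member of the reference class with equal priors on the two hypotheses; SIA additionally weights each hypothesis by its population.

```python
from fractions import Fraction

LATER_HUMANS = 110 * 10**9  # hypothetical count of non-prehistoric humans
MANY_PREHISTORIC = 10**15   # "numbering in the quadrillions"
FEW_PREHISTORIC = 10**6     # hypothetical "few" alternative

def credence_many(sia):
    """Credence that prehistoric humans were many, after learning you are not prehistoric."""
    pop = {"many": MANY_PREHISTORIC + LATER_HUMANS,
           "few": FEW_PREHISTORIC + LATER_HUMANS}
    # Equal priors; SIA additionally weights each hypothesis by its population.
    prior = {h: Fraction(1, 2) * (pop[h] if sia else 1) for h in pop}
    # Likelihood that a random member of the reference class is non-prehistoric.
    like = {h: Fraction(LATER_HUMANS, pop[h]) for h in pop}
    num = prior["many"] * like["many"]
    return num / (num + prior["few"] * like["few"])

print(float(credence_many(sia=False)))  # small: the non-SIA view bets against the archeologists
print(credence_many(sia=True))          # 1/2: anthropics leaves the question to the evidence
```

Under SIA the population weight and the “being late” likelihood cancel, so only the archeological data can move the credence.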

Thus, alternatives to SIA imply similar presumptuousness. But their presumptuousness is even weirder: they think we can figure out how many prehistoric humans there were through anthropic reasoning. They think that from the fact that you’re alive now, you can be confident that there weren’t many prehistoric people. I think that’s crazy: the number of people other than you shouldn’t be relevant to probabilistic reasoning. SIA doesn’t care about how many people other than you there are; it just instructs you to think there are more people that you might be!

Fourth, the presumptuous philosopher is relevantly like the presumptuous hatcher result:

Presumptuous Hatcher: Suppose that there are googolplex eggs. There are two hypotheses: first that they all hatch and become people, second that only a few million of them are to hatch and become people. The presumptuous hatcher thinks that their existence confirms hypothesis one quite strongly.

The only difference between the presumptuous philosopher and presumptuous hatcher results is whether the people who don’t get created are assigned an egg. But surely that doesn’t matter to anthropic reasoning!! Therefore, if we can show that the presumptuous hatcher result is correct, the same would be true of the presumptuous philosopher result. Fortunately, we have two separate arguments for the correctness of the presumptuous hatcher’s reasoning.

First, just in a straightforward probabilistic sense, the odds your egg would hatch are much higher on the first hypothesis than the second. So from the fact that it hatches, it seems that you should get strong evidence for the first hypothesis.

Second, I argue:

We can give an additional argument for the correctness of the presumptuous hatcher’s reasoning. Suppose that one discovered that when the eggs were created, the people who the eggs might have become had a few seconds to ponder the anthropic situation. Thus, each of those people thought “it’s very unlikely that my egg will hatch if the second hypothesis is true but it’s likely if the first hypothesis is true.” If this were so, then the presumptuous hatcher’s reasoning would clearly be correct, for then they straightforwardly update on their hatching and thus coming to exist! But surely finding out that prior to birth, one pondered anthropics for a few seconds, does not affect how one should reason about anthropics. Whether SIA is the right way to reason about one’s existence surely doesn’t depend on whether, at the dawn of creation, all possible people were able to spend a few seconds pondering their anthropic situation. Thus, we can argue as follows:

The presumptuous hatcher’s reasoning, in the scenario where they pondered anthropics while in the egg, is correct.

If the presumptuous hatcher’s reasoning, in the scenario where they pondered anthropics while in the egg, is correct, then the ordinary presumptuous hatcher’s reasoning is correct.

If the ordinary presumptuous hatcher’s reasoning is correct then the presumptuous philosopher’s reasoning is correct.

Therefore, the presumptuous philosopher’s reasoning is correct.
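The argument above is two applications of modus ponens, so its form is valid; whether it’s sound rests entirely on the premises. Here’s a minimal Lean 4 check of that form, with the proposition names chosen purely for illustration.

```lean
-- PonderingHatcher: the in-egg pondering hatcher reasons correctly.
-- OrdinaryHatcher: the ordinary presumptuous hatcher reasons correctly.
-- Philosopher: the presumptuous philosopher reasons correctly.
example (PonderingHatcher OrdinaryHatcher Philosopher : Prop)
    (h1 : PonderingHatcher)
    (h2 : PonderingHatcher → OrdinaryHatcher)
    (h3 : OrdinaryHatcher → Philosopher) :
    Philosopher :=
  h3 (h2 h1)
```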

Thus, not only is the presumptuous philosopher result not a problem for SIA; it positively supports SIA. There are two good reasons to think that the presumptuous philosopher result isn’t implausible and two good reasons to think it’s correct. The main objection to SIA, therefore, is totally bunk!

This isn’t all I say in the paper—read it to get the full picture. I also challenge Cirkovic’s attempt to get out of the Lazy Adam result and argue, contra Katja Grace, that SIAers aren’t vulnerable to a similar doomsday argument. But hopefully, the broad picture is clear.

(I’m trying something different by publishing two articles on the same day, where one of them is just a podcast link. Let me know what you think: is it too much?)

Clearly, I get to count the praise given by people of alternative world copies of the paper, right?


Congratulations, Matthew!