All My Writings On Utilitarianism

The response to Huemer and the utilitarianism wins outright series

Here’s part 2.

Contra Huemer on Utilitarianism Part 1

"The problem with this whole economics business is that it gets deeply unintuitive results. These economists talk endlessly about supply and demand curves. These graphs seem intuitive until you apply them to particular cases. Take the idea of a demand curve. It seems intuitive at first that at higher prices you purchase less of things. However, this general intuition gives deeply implausible conclusions, such as that the minimum wage causes unemployment, that rent control causes shortages, and that it's good when Americans buy things from other countries, even though it costs American jobs. At this point, this whole 'demand curve balderdash' starts to seem absurd."

- A strawman

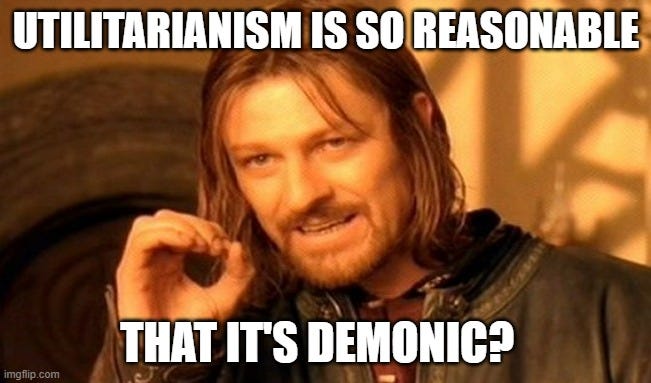

Michael Huemer is not a utilitarian, for reasons explained in this article, illuminatingly titled "Why I Am Not a Utilitarian." As the name "Bentham's Bulldog" might suggest, I am a utilitarian (I am not, however, a bulldog). Huemer has previously expressed some mild sympathy for utilitarianism, writing that it is "not a crazy view." However, as we learn in this article, our sanity does not justify trusting us with responsibilities as important as properly distributing cookies between serial killers and Mother Teresa, or keeping deathbed promises.

Why is Huemer not a utilitarian? Well, Huemer is an ethical intuitionist: he thinks that ethics must be built around making sense of a large number of particular cases. On Huemer's view, an ethical theory should have acceptable intuitive implications. Thus, he rejects utilitarianism for the same reason one would be justified in rejecting a seemingly acceptable moral theory upon learning that it holds that torturing infants for fun is justified. Huemer lays out a series of unintuitive results of utilitarianism and argues that these give us good reason to reject it. Later posts will argue against those specific intuitions.

Huemer says that utilitarians' habit of biting the bullet is problematic (dentists tend to say similar things). He says "The utilitarian faces a dilemma: a) If you don’t accept ethical intuition as a source of justified belief, then you have no reason for thinking that enjoyment is better than suffering, that satisfying desires is better than frustrating them, that we should produce more good rather than less, or that we should care about anyone other than ourselves. b) If you do accept ethical intuition, then at least prima facie, you should accept each of the above examples as counter-examples to utilitarianism. Since there are so many counter-examples, and the intuitions about these examples are strong and widespread, it’s hard to see how utilitarianism could be justified overall."

I accept the second horn of the dilemma, but weakly. I think that ethical intuitions do provide some support for beliefs, but how much depends heavily on the nature of the intuition. Just as it would be absurd to reject economics on the basis of intuitively compelling counterexamples, intuitions that justify general principles are often more reliable than intuitions about particular cases. If we look throughout human history, we see a whole host of terrible moral intuitions. Huemer (rightly) argues that our practice of eating meat is the worst thing in the world. A practice that most people regard as permissible, but that Huemer regards as akin to the Holocaust, shows the frequent unreliability of our intuitions. For much of human history, people regarded torturing and killing innocent people as permissible. Slavery was tolerated. All of this shows that our intuitions about particular cases are very often wrong.

Huemer asks how we can justify privileging utilitarian intuitions, writing "So how might one justify this? a. Maybe general, abstract intuitions are better than concrete intuitions about particular cases. Problem: It’s not obvious that utilitarian intuitions are any more abstract or general than non-utilitarian intuitions. E.g., imagine a case of a very selfish person causing harm to others, and you’ll get the intuition that this is wrong. Talk about the Shallow Pond example, or the Trolley Problem. It’s about equally plausible to say that core utilitarian claims rest on intuitions about cases like those as it is to make a similar claim about deontology. You can also represent deontology as resting on abstract, general intuitions, e.g., that individuals have rights, that we have a duty to keep promises, etc. It’s about equally plausible to say deontology rests on general intuitions like these as to say the same of utilitarianism."

There are numerous justifications for privileging utilitarian intuitions.

1) There seem to be good reasons for thinking that greater reflection leads to more utilitarian conclusions. Being smarter, less influenced by emotions, and more reflective all correlate with being more utilitarian. This is what we would expect if utilitarian intuitions were correct.

2) Utilitarians tend to be on the right side of history. Bentham supported legalizing homosexuality in the late 1700s. Mill was the second member of Parliament to support women's suffrage. Sidgwick supported women's right to education. Utilitarians like Singer are at the vanguard of the battle against factory farms, which Huemer regards as one of the most important moral causes. Bentham's Bulldog is writing awesome articles filled with correct opinions, especially on ethical issues. A long track record of utilitarians' weird and unintuitive ideas turning out to be right shows that we should privilege those intuitions.

3) Utilitarian intuitions realize that we should shut up and multiply--non-utilitarian intuitions don't.

4) Many non-utilitarian intuitions just seem to be emotional reactions to particular words in the sentence. As a society, we spend lots of time talking about how many things are very bad, e.g., murder. Thus, when utilitarianism asks whether murder is sometimes good, based on implausible stipulations that we have trouble imagining, the part of our brain that says "ick, murder, that's bad" overpowers reflection about the particular case.

Consider a question parallel to many of the objections to utilitarianism: "Suppose that abusing one's spouse were the right thing to do. Should you do it?" In this case, even though it's stipulated that abusing one's spouse is morally good, one still instinctively has the urge to answer "no," or more accurately "get the hell out of my house." The non-utilitarian intuition invokes concepts that are constantly discussed as being terrible, and for good reason. However, if we stipulate that those terrible things become good for strange reasons, often involving unnamed people with whom we have no emotional connection, it's very hard to come to the right (utilitarian) conclusion.

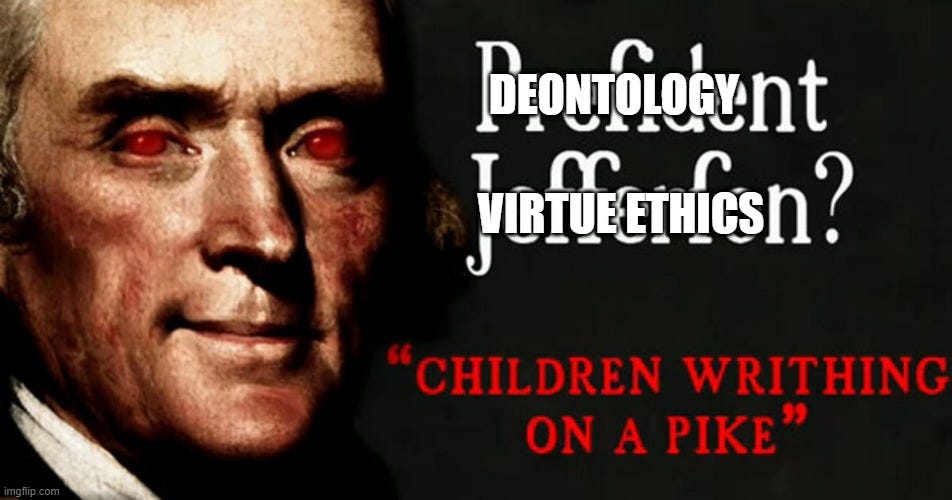

5) Perhaps most fundamentally, non-utilitarian intuitions can't stop running into contradictions and absurdities. We'll see more of that in the later parts of this series. Non-utilitarians have to hold that third-party observers should often hope for you to act wrongly, or that giving perfectly moral, omniscient decision makers extra options can make the world worse.

On top of this, they have to posit an increasingly strange and convoluted moral ontology, with various good-making features that share no common tether.

Very often, objections are given to utilitarianism, only for the utilitarian conclusion to turn out to be rationally inescapable. People very often use the repugnant conclusion to reject utilitarianism. However, Huemer himself has argued that we should all accept the repugnant conclusion. People worry that utilitarianism implies that some number of dust specks is worse than torture, even though that conclusion is rationally inescapable. As I argue here (in section 2), dozens of common counterexamples to utilitarianism are better described as cases where utilitarianism gets the correct but unintuitive result, and we have independent reason to accept the utilitarian judgement in each of these cases.

I, like God, am a Bayesian. So we should consider the evidence for and against utilitarianism based on how likely it would be if utilitarianism were true, versus if it were false. If utilitarianism were correct, we would expect it to often be counterintuitive, given how terrible human intuitions about ethics are. Thus, the fact that utilitarianism is unintuitive in many specific, narrow cases is very weak evidence against it. Given how much we'd expect the correct ethical view to diverge from intuition, it might even favor utilitarianism on net. However, it would be very surprising for an incorrect moral view to have all of the following features.

1 Being more widely believed by smarter, more reflective people.

2 Doing much better in terms of theoretical virtues like simplicity.

3 Being derivable from a smorgasbord of plausible theoretical principles.

4 Having its adherents be constantly on the right side of history, hundreds of years ahead of their time.

5 Avoiding strange, contradictory results.

6 Generally having counterintuitive implications only in the realm of sacred values.

7 Having alleged counterexamples to it almost always be independently debunkable on alternative grounds, such that smart people create theorems demonstrating that believing the counterexamples produces absurdity.
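The Bayesian comparison above can be stated with the odds form of Bayes' theorem. In this sketch, U stands for "utilitarianism is true" and E for some body of evidence, such as a counterintuitive verdict or one of the seven features just listed; the notation is my own shorthand, not anything from Huemer's article.

```latex
\frac{P(U \mid E)}{P(\neg U \mid E)}
  = \frac{P(E \mid U)}{P(E \mid \neg U)} \times \frac{P(U)}{P(\neg U)}
```

Evidence E counts against utilitarianism only when the likelihood ratio P(E | U) / P(E | ¬U) is below 1. The argument above is that, given how unreliable intuitions about particular cases are, counterintuitive verdicts have a ratio close to 1, while each of the seven features has a ratio well above 1. If the features are treated as conditionally independent (a simplifying assumption), their ratios multiply.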

Even Huemer, in his book Knowledge, Reality, and Value, said that he grows more sympathetic to utilitarianism as time passes. The truth may appear false at first, but when one considers enough ethical cases, one realizes that utilitarianism gets the right result every damn time, even in cases that were specifically designed to be counterexamples to utilitarianism. So yes, utilitarianism has some counterintuitive results. But they're counterintuitive in the way that good economics is counterintuitive. They're counterintuitive in the way that Parfit's reductionism about personal identity is counterintuitive, or that quantum physics is counterintuitive.

David Friedman wrote, “The modern economist reading Ricardo’s Principles feels rather as a member of one of the Mount Everest expeditions would feel if, arriving at the top of the mountain, he encountered a hiker clad in T-shirt and tennis shoes.” Reading the utilitarians produces a similar experience, for utilitarianism solves the troublesome ethical questions of the modern age. Utilitarians are very concerned about animal suffering. It turns out there are good independent reasons to have that concern. Non-utilitarians have a very difficult time accounting for rights. However, all rights can be explained extremely easily on utilitarian accounts. The right to life is worth enshrining into law because it's conducive to utility. That's why we have the right not to let other people enter our house but not the right not to let other people look at our house. That's why it's a violation of our rights to shoot sharp projectiles at us, but not a violation of our rights to shoot sharp soundwaves at us by talking.

Political philosophers struggle with the question of whether taxation is theft. Utilitarianism provides a very easy answer: who cares? Call it what you want, but it's not bad if it's generally conducive to utility. Utilitarianism very easily solves problems in population ethics. The reason Huemer becomes more sympathetic to utilitarianism over time is the ease with which it (correctly) solves very difficult questions.

Prior to writing my post on the Effective Altruism Forum, I had not realized that utilitarianism could be verified to give correct results in all of the cases that I considered. For nearly all of them, I had no idea in advance what the independent arguments against the counterexample would be. But every single time, it quickly became apparent on independent grounds that utilitarianism was right. Like Ricardo deducing modern price theory without working out the math, utilitarianism got the right answer every single time. For some of Huemer's examples, I have not yet worked out independent justifications for "biting the bullet." However, I have strong confidence that careful reflection will reveal a justification, for it always has in the past. When utilitarianism reaches the top of the mountain immediately, again and again, with its conclusion always met by a path forged by independent logical arguments, it becomes very clear that the utilitarians are on to something.

In the Bible, Numbers 22-24 tells the story of Balaam and Balak. Balak was the king of Moab, and he wanted Balaam, a sorcerer, to curse the Jews. However, every time Balaam tried to curse the Jews, he ended up accidentally blessing them (God has a tendency to make curses fail and become blessings). That somewhat reminds me of the tendency of objections to utilitarianism to backfire. Derek Parfit (a far greater individual than Balak, who also had the good fortune of existing, unlike Balak) tried to curse utilitarianism by devising a conclusion so repugnant that he named it the repugnant conclusion, arguing that it serves as a devastating counterexample to utilitarianism. It turns out that it's basically impossible to escape the repugnant conclusion without replacing one's diet entirely with bullets. Nozick and others cursed utilitarianism with the charge of devaluing rights, only for independent arguments to reveal that non-utilitarian accounts of rights are superfluous, ungrounded, and absurd. Enough curses turned into blessings should reveal that utilitarianism is fundamentally right.

Utilitarianism is the modern price theory of ethics. It allows us to quickly reach the top of the mountain in just a T-shirt. People like Huemer are surprisingly diligent and resilient climbers, who manage to quickly reach places relatively near the top. However, the mountain contains many treacherous turns, many of which result in ethical disaster, even relatively near the top. I wish you well, fellow travelers!

Contra Huemer on Utilitarianism Part 2

Organ Harvesting

Michael Huemer has an unfortunate reluctance to kill people and harvest their organs. This reluctance is so strong that he thinks utilitarianism’s toleration of killing people and harvesting their organs counts against it. Huemer gives the following case: “a. Organ harvesting Say you’re a surgeon. You have 5 patients who need organ transplants, plus 1 healthy patient who is compatible with the other 5. Should you murder the healthy patient so you can distribute his organs, thus saving 5 lives?”

Of course. It’s notoriously difficult to disentangle the morality of particular acts from the morality of the people who would take those acts. Those who would actually kill patients and harvest their organs would almost certainly be evil, since in the real world we can’t be certain that doing so will save five lives. Thus, this thought experiment is counterintuitive because it has a few characteristics.

1 It pits our sacred value of not killing people against preventing some amorphous extra deaths. Excess deaths are far too common for people to get bent out of shape about them.

2 It presumes that extraordinary recklessness turns out to have good results.

3 It contains the word "murder," which people tend to be averse to.

Maybe you’re still not convinced. Perhaps you believe in ~~ghosts~~ rights, and you think that not violating rights is morally more important than preventing other rights violations. However, belief in rights is wrong, so let’s right the wrong, as is our right.

1 It seems a world without any rights would still matter morally. For example, imagine a world with sentient plants, who can’t move, where all harm is the byproduct of nature. It seems plants being harmed, despite their rights not being violated, is bad. We can consider a parallel case of a robot that does not experience happiness or suffering. Even though this robot acts exactly like us, it would not matter absent the ability to feel happiness or suffering. These two intuitions combine to form the view that beings matter if and only if they can experience happiness. This serves as strong evidence for utilitarianism.

One could object that rights are an emergent property of happiness, such that a being gains them only when it can experience happiness. However, this requires deeply implausible strong emergence. As Chalmers explains, weakly emergent properties are merely reducible to interactions of lower-level properties. For example, chairs are reducible to atoms, given that we need nothing more to explain the properties of a chair than knowing the ways that atoms function. They are purely the result of atoms functioning. Strongly emergent properties, however, are not reducible to lower-level properties. Chalmers argues that there is only one thing in the universe that is strongly emergent: consciousness. Whether or not this is true, it reflects the broader principle that strong emergence is prima facie unlikely. Rights are clearly not reducible to happiness; no amount of happiness magically turns into a right. This renders the claim deeply implausible.

2 Everything that we think of as a right is reducible to happiness. For example, we think people have the right to life. Yet the right to life increases happiness. We think people have the right not to let other people enter their house, but we don’t think they have the right not to let other people look at their house. The only difference between shooting bullets at people and shooting soundwaves at them (i.e., making noise) is that one causes a lot of harm and the other does not. Additionally, we generally think it would be a violation of rights to create so much pollution that a million people die, but not a violation of rights to light a candle that kills no one. The difference is just in the harm caused. Additionally, if enshrining as rights things that we currently don’t think of as rights began to maximize happiness, we would think that they should be recognized as rights. For example, we don’t think it’s a violation of rights to look at people, but if every time we were looked at we experienced horrific suffering, we would think it was a rights violation to look at people.

3 If we accept that rights are ethically significant, then there is some number of rights violations that could outweigh any amount of suffering. For example, suppose that there are aliens who will experience horrific torture that gets slightly less unpleasant for every human leg they grab without the human’s knowledge or consent, such that if they grab the legs of 100 million humans, the aliens will experience no torture. If rights are ethically significant, then the aliens grabbing the legs of the humans, in ways that harm no one, would be morally bad: the sheer number of rights violations would outweigh the aliens’ torture. However, this doesn’t seem plausible. It seems implausible that the aliens should have to endure horrific torture so that we can preserve our magic rights-based force fields from infringement that produces no harm for us. If rights matter, a world with enough rights violations, where everyone is happy all the time, could be worse than a world where everyone is horrifically miserable all of the time but where there are no rights violations.

4 A reductionist account is not especially counterintuitive and does not rob us of our understanding of, or appreciation for, rights. It can be analogized to the presumption of innocence until proven guilty. The principle of "innocent until proven guilty" is not literally true. Treating a person as innocent until their guilt is demonstrated is a useful legal heuristic, yet a serial killer is guilty even if their guilt has not been demonstrated.

5 An additional objection can be given to rights. We generally think that it matters more not to violate rights than to prevent other rights violations. We intuitively think that we shouldn’t kill one innocent person to prevent two murders. Yet preventing a murder is no more morally relevant than preventing any other death. A doctor should not try any harder to save a person’s life because they were shot than because they have a disease not caused by malevolent actors. I shall give a counterexample to this intuition. Suppose people stand in a circle, each holding two guns, each of which will shoot a person next to them. Each person can either prevent two other people from being shot by someone else’s guns, or prevent their own gun from shooting one person. If we take the view that one’s foremost duty is to avoid violating rights oneself, then each person would be obligated to prevent their own gun from shooting the one person. However, if everyone stops one of their own guns from firing, rather than stopping two of other people’s guns, then everyone in the circle would end up being shot. If, however, it’s more important to save as many lives as possible, then each person would stop two guns from firing. The second world would have no one shot, while the first would have everyone shot. The first world seems clearly worse.

Similarly, if it is bad to violate rights, then one should try to prevent one’s own rights violations at all costs. If that’s the case, then if a malicious doctor poisons someone’s food and then realizes the error of their ways, the doctor should try to prevent the person from eating the food and having their rights violated, even if it’s at the expense of other people being poisoned in ways not caused by the doctor. If the doctor can either prevent one person from eating the food they poisoned or prevent five other people from consuming food poisoned by others, they should prevent the one person from eating the food they poisoned. This is obviously false.
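The circle-of-guns case in point 5 leaves the exact seating unspecified, so here is a minimal Python sketch under assumptions of my own: each person holds one gun aimed at each neighbor, the self-focused policy has everyone disable their own right-hand gun, and the save-the-most policy has everyone disable both guns of the next person around the circle. The function name `count_shot` and the policy labels are hypothetical.

```python
# Hypothetical model of the circle-of-guns case: person i holds a "left" gun
# aimed at seat i-1 and a "right" gun aimed at seat i+1 (seats wrap around).

def count_shot(n: int, policy: str) -> int:
    """Return how many of the n people are shot when everyone follows `policy`.

    "own":    each person disables their own right gun (one shot prevented).
    "others": each person disables both guns of the next person, seat i+1
              (two shots prevented).
    """
    # live[(i, side)] is True while person i's gun on that side can still fire
    live = {(i, side): True for i in range(n) for side in ("left", "right")}
    for i in range(n):
        if policy == "own":
            live[(i, "right")] = False
        elif policy == "others":
            nxt = (i + 1) % n
            live[(nxt, "left")] = False
            live[(nxt, "right")] = False
        else:
            raise ValueError(f"unknown policy: {policy}")
    # Collect everyone targeted by at least one gun that still fires
    shot = set()
    for (i, side), is_live in live.items():
        if is_live:
            target = (i - 1) % n if side == "left" else (i + 1) % n
            shot.add(target)
    return len(shot)

print(count_shot(10, "own"))     # prints 10: every left gun still fires
print(count_shot(10, "others"))  # prints 0: every gun has been disabled
```

Under these assumptions, the self-focused policy leaves every left-hand gun loaded, so everyone is shot, while the save-the-most policy disarms every gun in the circle.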

6 We’ve already seen that all of the things that we think of as rights are generally conducive to happiness. However, this is not the extent of the parity. The things we don’t think of as rights would start being treated as rights if they were conducive to utility. Imagine a world where every time you talked to someone, it burst their eardrums and caused immense suffering. In that world, talking would and should be considered a rights violation. Thus, being conducive to utility is both a necessary and a sufficient condition for something to be a right.

7 We have decisive scientific reasons to distrust the existence of rights, which is an argument for utilitarianism generally. As Greene et al. argue, “A substantial body of evidence indicates that utilitarian judgments (favoring the greater good) made in response to difficult moral dilemmas are preferentially supported by controlled, reflective processes, whereas deontological judgments (favoring rights/duties) in such cases are preferentially supported by automatic, intuitive processes.”

People with damage to the VMPC (ventromedial prefrontal cortex, a brain region involved in generating emotions) were more utilitarian (Koenigs et al. 2007), suggesting that emotion is responsible for non-utilitarian judgements. While there is some dispute about this thesis, the largest data set, from Fornasier et al. (2021), finds that better and more careful reasoning results in more utilitarian judgements across a wide range of studies. They write, “The influential DPM of moral judgment makes a basic prediction about individual differences: those who reason more should tend to make more utilitarian moral judgments. Nearly 20 years after the theory was proposed, this empirical connection remains disputed. Here, we assemble the largest and most comprehensive empirical survey to date of this putative relationship, and we find strong evidence in its favor.”

8 Rights run into a problem based on the aims of a benevolent third-party observer. Presumably, a third-party observer should hope that you do what is right. However, a third-party observer, given the choice between one person killing another to prevent five indiscriminate murders and the five indiscriminate murders themselves, should obviously prefer the one killing to prevent five murders. An indiscriminate murder is at least as bad as a murder done to try to prevent five murders, and five indiscriminate murders are worse than one indiscriminate murder; therefore, by transitivity, a world with one murder to prevent five should be judged better than a world with five indiscriminate murders. Call the first world A and the second world B. If a benevolent, impartial observer should prefer world A to world B, then it is right to bring about world A. All of the moral objections to bringing about A would count against world A being better. If, despite those objections, world A is preferred, then it is better to bring about world A. Therefore, one should murder one person to prevent five murders. This seems to contradict the notion of rights.

9 We can imagine a case with a very large series of rings of moral people. The innermost circle has one person, the second innermost has five, third innermost has twenty-five, etc. There are a total of 100 circles. Each person is given two options.

1 Kill one person

2 Give the five people in the circle outside of you the same options you were just given.

The people in the hundredth circle will be given only the first option if the buck doesn't stop before reaching them.

The deontologist has two options. First, they could stipulate that a moral person would choose option 2. However, if this is the case, then a cluster of perfectly moral people would bring about 1.5777218 x 10^69 murders (one for each of the 5^99 people in the hundredth circle), when the alternative actions could have resulted in only one murder. This seems like an extreme implication.

Second, they could stipulate that you should kill one person. However, the deontologist holds that you should not kill one person to prevent five people from being murdered. If this is true, then you certainly shouldn’t kill one person to give five perfectly moral people two options, one of which is killing one person. Giving perfectly moral beings more options that they don’t have to choose cannot make the situation morally worse. If you shouldn’t kill one person to prevent five murders, you certainly shouldn’t kill one person to prevent five things that a perfectly moral being judges to be at most as bad as murders.
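The arithmetic behind the 1.5777218 x 10^69 figure can be checked with a short Python sketch. It assumes, as the text does, that ring k holds 5^(k-1) people, so that if every ring passes the buck, each of the 5^99 people in the hundredth ring is left only with the option to kill. The function names are hypothetical.

```python
# Hypothetical sketch of the rings case: ring k (k = 1 for the innermost)
# holds 5 ** (k - 1) people, and there are 100 rings.

NUM_RINGS = 100

def ring_size(k: int) -> int:
    """Number of people in ring k; ring 1 is the innermost, single person."""
    return 5 ** (k - 1)

def murders_if_ring_kills(k: int) -> int:
    """Murders when every ring inside ring k passes the options outward
    and every person in ring k chooses option 1 (kill one person)."""
    return ring_size(k)

best = murders_if_ring_kills(1)           # the innermost person kills
worst = murders_if_ring_kills(NUM_RINGS)  # the buck is passed all the way out
print(best)                   # prints 1
print(f"{float(worst):.7e}")  # prints 1.5777218e+69
```

Python integers are arbitrary-precision, so `worst` is the exact value of 5^99; the conversion to `float` is only for printing it in scientific notation.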

Rights have been officially debunked. They are no more. Yet there are some more specific objections to the organ harvesting objection.

First, there’s a way to explain it away sociologically. Rightly, as a society, we have a strong aversion to killing. However, our aversion to death in general is far weaker. If it were as strong, we would be paralyzed, because people die constantly of natural causes. Thus, there’s a strong sociological reason for us to regard killing as worse than letting people die. However, this developed as a result of societal norms, rather than as a result of accurate, truth-tracking moral processes. This intuition about the badness of killing exists only in areas where killing to save people is usually not conducive to happiness. Many of us would agree that the government could kill an innocent person in a drone strike in order to kill a terrorist who would otherwise kill ten people. The reason for the divergence in intuitions is that medical killings are very often a bad thing, while government killings via drone strikes are often perceived to be justified.

Second, we have good reason to say no to the question of whether doctors should kill one to save five. A society in which doctors regularly violate the Hippocratic oath and kill one person to save five would be a far worse world. People would be terrified to go into doctors’ offices for fear of being murdered. Cases of one being murdered to save five would be publicized by the media, resulting in mass terror. While this thought experiment generally stipulates that the doctor will certainly not be caught and the killing will occur only once, our revulsion to very similar and more easily imagined cases explains our revulsion to killing one to save five. It can also be reasonably argued that things would go worse if doctors had the disposition to kill one and save five. Given that a utilitarian’s goal is to take the acts and follow the principles that make things go best in the long term, a more valuable principle that entails not taking this act can be justified on utilitarian grounds.

Third, we can imagine several modifications of the case that make the conclusion less counterintuitive.

A) Imagine that the six people in the hospital were family members whom you cared about equally. Surely we would intuitively want the doctor to bring about the death of one to save five. The only reason we have the opposite intuition when family is not involved is that our revulsion to killing can override other considerations when we feel no connection to the anonymous, faceless strangers whose deaths are caused by the doctor’s adherence to the principle that they oughtn’t murder people.

It could be objected that even with family members the intuition is the same. Yet this doesn’t seem plausible, particularly if no one had any knowledge of the doctor’s action. If no one knew that the doctor had killed the one to save the other five, surely it would be better for this to happen. An entire family being killed would clearly be worse than one family member dying.

It could be objected that adding in family makes the decision-making worse by introducing personal biases. Yet this is not so. Making it more personal requires us to think about it in a more personal way. It is very easy to neglect the interests of the affected parties when we don’t care much about them. Making it entirely about close family members matters because we care about family. If we care about what is good for our family, then making the situation entirely about our family is a good way to figure out what is good, all things considered. Yet this is not the only case that undercuts the intuition.

B) Suppose that a doctor was on their way to a hospital with organs for transplant that would save five people who would otherwise die. They see, on the side of the road, a murder that they could prevent, but doing so would require a long delay that would cause the deaths of the five people in the hospital. It seems clear that the doctor should continue to the hospital. Thus, when we simply weigh allowing five to die against one murder, the badness of five people dying outweighs.

C) Imagine that 90% of the world needed organs, and we could harvest one person’s organs to save nine others, who would then live perfect lives. It seems clear that it would be better to kill the ten percent than to let the other 90% die.

Finally, let’s investigate the principle behind not harvesting organs.

We could adopt the view NK, which says that one ought not kill innocent people. Yet view NK is clearly subject to many counterexamples. If the only way to stop a terrorist from killing a million people was by killing one innocent person, we should surely kill the innocent person. And most people would agree that if you could kill a person and harvest every cell in their body to save a million people, that action would be permissible.

We could adopt view NKU, which says one ought not kill unless there is an overriding concern that involves vast numbers of people. Yet this view also seems to run into a problem.

It seems that the strength of the objection to killing depends on the context in which a person is killed. A terrorist who is using a human shield and is about to kill five people could be killed, along with the shield, yet it seems less intuitive to kill a person to harvest their organs. Thus, the badness of killing is context-specific. This adds credence to the utilitarian view, in that the context generally seems to follow rules about whether killing in most similar cases would make things go best.

We could take the view DSK, which says doctors shouldn’t kill. However, this view is once again very easily explainable sociologically: it is very good for society that doctors don’t generally kill people. But in a deeper, metaethical sense it seems to make less sense.

We can consider a similar case that doesn’t seem to go against the obligations of a doctor. Suppose that a doctor is injecting patients with a drug that cures disease in low doses but kills in too high a dose. Midway through injecting the drug, they realize that they’ve given a lethal dose to five other patients in another room. The only way they can save those five is by leaving immediately, which means allowing their current patient to receive too high a dose, and that patient will die. It seems intuitive that the doctor should save the five rather than the one.

One might object that the crucial difference is that the doctor is killing rather than merely failing to save. However, we can consider another case, in which the doctor realizes they’ve given a placebo to five people rather than life-saving medicine. The only way to give them the life-saving medicine would be to abandon the room the doctor is in, much like in the previous example. It seems very much like the doctor should go to the other room, even though this will result in a death caused by their injection. The cause of the lethal condition shouldn’t matter to what they should do. Shelly Kagan has argued that there is no plausible doing/allowing distinction that survives rigorous scrutiny. Given the repeated failure to generate a plausible counter-theory, we have reason to accept the utilitarian conclusion.

Additionally, imagine a situation in which people were very frequently afflicted by flying explosives, which would blow up and kill the five people around them unless the afflicted person were killed. In a world where that happened often, it starts to seem less intuitive that we shouldn’t kill one to save five.

Humans follow patterns. Seeing “murder is bad” enough times leads them to conclude that murder is generally bad. This corrupts their judgment about particular cases in which the bad features of murder are absent.

AlphaZero was a chess-playing AI that learned to play chess by playing against itself many times, never influenced by human strategy. As a result, it didn’t follow the common strategies and heuristics of humans, and it played much better than other chess engines. Humans were baffled by AlphaZero’s strategies and described it as playing chess like an alien. Utilitarian ethics is somewhat similar. It’s the optimal ethical system, but it often ignores the rules that humans use for ethics. Our bafflement at its seemingly strange mandates is no more surprising than bafflement at AlphaZero’s chess. Sometimes, heuristics hold us back. Yet as I hope to have shown, though utilitarianism plays chess like an alien, it plays better chess.

Contra Huemer on utilitarianism part 3

A promise to keep

Content warning

Please do not read if you are planning on giving me money on your death bed that you hope I’ll deliver faithfully to your child, for reasons that will become apparent.

1 A not totally terrible action

The old man spoke to his contemporary, slowly dying. “My friend, you know I have never made a will.” His contemporary nodded. “I want you to tell everyone,” the man coughed, “I want you to tell everyone that when I am dead my money should be taken and delivered to the top of Mount Everest by Jim, who has a reasonable chance of dying. He did, however, swear that he would do it.” His contemporary squirmed.

“Erm, why?” he asked, evidently deeply confused by the strange request.

“Well, that’s just reducible to psychological facts about me. I have a strange sort of desire. Also, my sons said they thought it would be really funny, though they didn’t expect me to do it.”

His contemporary spoke more sullenly: “My friend, surely you don’t mean that. Come, you must be addled, so near death.”

“No, no,” said the man. “I’m perfectly mentally fit. This extended short story wouldn’t really work if I were impaired, now would it? Here, I’ll demonstrate. The probability of B given A equals the prior probability of B times the probability of A given B, over the probability of A. Oh, also, by the way, I’ve recently proven the Goldbach conjecture.” The man presented his proof of Goldbach’s conjecture in excruciating detail, and it was correct.

“Okay, so you’re not addled,” said his contemporary. “Does anyone else know about this particular desire of yours?”

“No,” said the man. “My children once mentioned that they thought it would be funny. My children are already millionaires, so they wouldn’t benefit from the money; the only way they’d benefit from it is if I put it at the top of Mount Everest.”

“Well, even if they said it was funny, surely that doesn’t mean they would actually want that to be what you do with your money, right?”

“No,” said the man. “I’ve actually derived from first principles that they’ll find it really funny. And before you doubt me, remember that I proved Goldbach’s conjecture very recently. In fact, I derived from first principles that they’d find it so funny that they’d chuckle about it for fifteen minutes every day.”

“But you said Jim will probably die.”

“Oh yes, and Jim provides for his family of five people, so they’ll probably die as well. However, Jim made a deal that he would bring it to the top of Mount Everest when I died, in exchange for one hundred thousand dollars. Jim was actually hypnotized to forget about it unless someone else brings it up, so Jim isn’t currently aware of my strange desire.”

The man coughed. “My friend, I will die within 30 seconds. Promise me you’ll tell Jim to take my money up Mount Everest.”

“I will,” replied his contemporary.

The man died. His contemporary stepped out of the room. “Any last wishes, or any indication of what should be done with his money?” someone asked.

“He said it should be given to his sons,” the contemporary replied.

2 Replying to Huemer

You, dear reader, probably have the intuition that breaking the promise in order to save multiple people is justified in the case above. Yet in that case, we’ve stipulated that you’re lying to a person on his deathbed, in a way that would harm his sons but likely save lives. Huemer thinks that a similar case provides a decisive counterexample to utilitarianism, writing “c. Deathbed promise

On his death-bed, your best friend (who didn’t make a will) got you to promise that you would make sure his fortune went to his son. You can do this by telling government officials that this was his dying wish. Should you lie and say that his dying wish was for his fortune to go to charity, since this will do more good?”

Yes! This is structurally analogous to the case I gave above. Why is it analogous? Well, a person’s fortune can save many lives. The cost to save a life is about 4,500 dollars. Presumably the man’s fortune is more than 4,500 dollars. Suppose that the man’s fortune is a fairly modest 45,000 dollars. In this case, lying about the deathbed promise saves ten lives, roughly twice as many as are saved by sparing Jim from dying on the slopes of Everest. In both cases you are lying to a dying man to save the lives of many people, but in a way that fails to benefit his sons. This case is analogous to lying to a murderer to prevent your roommate from being murdered.
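For anyone who wants the arithmetic spelled out, here is a minimal Python sketch of it. It assumes, as the paragraph above does, a flat cost of about 4,500 dollars per life saved; real cost-effectiveness estimates vary, so treat the constant as an illustrative assumption rather than a fixed fact.

```python
# Rough lives-saved arithmetic for the deathbed example.
# Assumption: a flat cost per life saved (the post uses about 4,500 dollars).
COST_PER_LIFE = 4_500


def lives_saved(fortune: float, cost_per_life: float = COST_PER_LIFE) -> float:
    """Return the expected number of lives a donation of `fortune` dollars saves."""
    if cost_per_life <= 0:
        raise ValueError("cost_per_life must be positive")
    return fortune / cost_per_life


print(lives_saved(45_000))  # 10.0
```

Dividing rather than rounding keeps the result an expected value, which is how per-life cost figures are usually meant.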

Utilitarianism gives us good reason to follow common moral heuristics like honesty. Being the type of person who keeps promises nearly all of the time is conducive to utility. Thus, utilitarians can account for the value of the promise. If utilitarians broke their promises every time doing so seemed to maximize utility in the moment, that general practice would not be conducive to utility.

Part 3 Another dialogue

The old man sat on his deathbed and coughed. “My friend,” he said to his contemporary, “my wealthy sons do not need money. In contrast, my ten daughters all have terminal illnesses that can be cured for 30,000 dollars total. However, I don’t like my daughters very much, so please give the money to my sons.”

“Why don’t you like your daughters?” asked the contemporary.

“A few reasons,” replied the man. “First, they’re very far away. I care less about people the farther away they are. Second, I have a lot of daughters, so each one matters less. Third, there are lots of other people who could in theory help my daughters but are not doing so, so why should I? Fourth, they’re adopted, so they don’t have my super cool genes.” “And before you ask,” he added, “here’s a decisive proof of Goldbach’s conjecture.”

“Promise me you’ll do this,” the man demanded.

“I promise,” replied his contemporary.

With adept timing, the man died. His contemporary emerged. “He wanted the money to be given to his daughters,” he said.

Part 4 This is actually a really good analogy if I say so myself and I do say so myself therefore, it’s a really good analogy (by modus ponens)

In the above case, the contemporary broke a promise to save the lives of ten people. However, the action seems clearly justified. In both this case and Huemer’s, the facts that the beneficiaries are far away, that there are many people who could be helped, that others could in theory help, and that they are not genetically related are not morally relevant.

Why are our intuitions so far off? Well, for one, even after hearing that we can save a child’s life for less than the cost of a cheap car, it’s very difficult to internalize that fact. Thus, when we think of donating, it’s hard to emotionally resonate with the full value of the action.

Part 5 Look, I know you’re probably getting tired of the analogies but I think they’re fun and some of you do too and also they illustrate why Huemer’s objection is wrong. So here’s another analogy, just bear with me on this one, much love, Bentham’s Bulldog.

The man approached his contemporary. “First things first, I’m not addled, here’s a proof of Goldbach’s conjecture yadda yadda,” he said.

“Second, here is all of my money. Please put it very deep in your pockets such that it would take about two minutes to get it out, given your enormous mazelike pockets. Deliver it to my sons and their family and don’t stop for anything, not even drowning children. Promise me this.”

“I promise,” said his contemporary, who was hoping for more dialogue like he’d had in the previous scenarios (he was an actor from Los Angeles). The man died, right after he’d given his 30,000 dollars to his contemporary.

His contemporary started to drive to deliver the money to the man’s sons’ families (not to be confused with the Manson family). On his way (shocker, I know), he saw 37 children drowning in a shallow pond. There was a button fairly deep under the water that he could press to flip up the shallow pond, saving all 37 children. However, he would have to wade into the water, ruining the money and breaking his promise. “Hmm, this Bentham’s Bulldog guy makes a pretty compelling case for violating promises sometimes,” he said, before jumping into the water and saving the thirty-seven children.

Part 6 Some more analysis of why the previous case is pretty analogous and also the above guy was not totally awful

The above scenario makes it clear that he should ruin the money and violate his promise to save many lives. It’s not clear what the morally relevant difference is between this and Huemer’s scenario.

Maybe the disanalogy is that the action is more direct. However, even if his jumping in the water was the only way to press a button that would save people on the other side of the world, it still seems like he should jump in. Maybe the disanalogy is that the scenario was unexpected. Well, people who make that disanalogy should read more carefully, because I specifically stipulated that the promise included not stopping for drowning children. These imaginary straw-men need to stop straw-manning my position.

Maybe the disanalogy is that other people have the ability to save the lives of the people who are dying of malaria. However, that doesn’t seem to matter. Suppose that there are other people who are standing by and doing nothing. Surely, he should still jump in.

Maybe the disanalogy is that there are endless people who could be saved, but it’s impossible to save them all. However, even if there were lots of extra drowning people, it doesn’t seem like that would weaken the obligation to save the 37 children.

Perhaps we think that the relevant difference is that only a very callous person would ask another to promise to ignore drowning children. However, even if in Huemer’s case the person is callous, it doesn’t seem to undermine the intuition. Additionally, the argument above was intended to show that failing to donate the money to GiveWell is a seriously wrong action, akin to ignoring drowning children. Finally, even if we suppose that the people on their deathbeds are saintly and have diligently devoted their entire lives to helping others, the intuition still applies.

Huemer might reply that in other cases where no one’s life is on the line (for example, if the amount donated is only twenty dollars), the analogy disappears. However, in such a case, a utilitarian wouldn’t endorse violating the promise. Being an honest person makes things go best, so honesty should only be violated when the stakes are large. If only twenty dollars were at stake, it wouldn’t be worth lying.

Sidenote: If you’re considering giving me any money on your deathbed that you hope I’ll faithfully deliver to your child, ignore everything I wrote; I deeeeeeeeefinitely would never violate the deathbed promise :).

Sidenote 2: The reason I responded to Huemer’s objections out of order was because I got mixed up and was so invested in responding to this one that, by the time I realized I was out of order, I decided to finish this post.

Sidenote 3: The views expressed here are my own and do not reflect the views of utilitarians broadly. I’m sure none of them would ever in a million years break a deathbed promise in order to donate money.

Contra Huemer on utilitarianism part 4 (alternatively, a post to get me out of jury duty)

Why you should sometimes frame innocent people (though not usually).

(Something Michael Huemer is very against)

Michael Huemer has another objection to utilitarianism, thinking that it justifies framing innocent people to save lives, which he thinks is bad. Huemer writes “b. Framing the innocent

You’re the sheriff in a town where people are upset about a recent crime. If no one is punished, there will be riots. You can’t find the real criminal. Should you frame an innocent person, causing him to be unjustly punished, thus preventing the greater harm that would be caused by the riots?”

Yes!

A first point is that in most realistic situations, one ought not frame people. Thus we have strong reason to answer no to the question of whether innocent people ought to be framed, even if we can imagine rare situations in which it would maximize happiness to do so.

Second, we can explain away our revulsion sociologically, by appealing to the revulsion we rightly feel toward framing innocent people in ordinary cases, which carries over to this unusual one.

Third, we can make modifications like the ones made in part five by making the people family members. Surely you would rather have one family member framed than have all your family members killed.

Fourth, suppose we could prevent either five murders by a lynch mob or one innocent person from being framed and killed by a lynch mob. Surely we should prevent the former. One could appeal to the act/omission distinction in this case. However, we can modify the case to avoid this.

Imagine a case in which a person (we’ll call him Tim) wrote and mailed an anonymous letter that would frame an innocent person, who would then be killed by the mob. However, after mailing the letter, Tim had a change of heart and decided to prevent it from being delivered. When he found the stash where his letter was stored, it turned out to be an iron box with a robust security system: if two letters were taken out and destroyed, the alarm would sound, and the letters would be returned to their original location. As Tim is about to take out his letter, he sees another letter, which names five people who Tim knows are being framed and will be killed by the mob. If Tim takes out his own letter, he will not have framed an innocent person, and no one will be left worse off as a result of Tim’s actions. However, if Tim takes out the letter containing five names, he will prevent a mob from killing five innocent people. In this case, it seems very intuitive to take out the letter with five names, yet the case is very much like the earlier one. When one is taking out letters, it should not matter who wrote them. Additionally, suppose one wrote the letter while asleep (sleep letter forging is considerably rarer than sleepwalking, but not impossible). In that case, it seems even more bizarre to take out one’s own letter merely because one wrote it, rather than the letter whose removal would save five lives.

One could object that the cases are not parallel. However, the cases have been designed to be identical in morally relevant respects. In both cases, one is framing an innocent person to save five people. The only difference is that one framing is dragged out over a longer period of time and is done by mail. Those, however, are not morally relevant differences.

Fifth, let’s imagine another parallel case. A mob is planning on killing a large number of people, because they think that they all conspired to do the crime. They believe that conspiring to do the crime is especially horrendous (they take a hardline stance against conspiracies), so if they believed that only one person committed the crime they would beat that person severely, but not kill that person. However, that person would otherwise be killed by the mob, along with everyone else. Thus, it would be better for literally everyone if that person is framed. In that case it seems intuitive that you should frame the person to reduce the severity of harm to them, and save everyone else’s life.

Sixth, we can consider a case similar to the case I appealed to when arguing against magic rights. Consider the following case.

There are 100 concentric circles, the first with one person, the second with five, the third with 25, the fourth with 125, etc. Each person has 5 corresponding people in the circle outside theirs (e.g., person 1 in circle 1 corresponds to all five people in circle 2; Jim in circle 2 corresponds to Mary, Sue, Edith, Rampampam, and Gulac in circle 3; etc.). The first 99 circles are composed of perfectly moral people. Each person has two options.

They can either

1 Frame an innocent person

2 Give the five people corresponding to you the options you were given.

However, the people in the 100th circle will certainly each frame an innocent person. If we hold that it’s not worth it to frame an innocent person to prevent 5 murders, presumably we’d hold that it’s not worth it to frame an innocent person to prevent five framings. And if it’s not moral to frame an innocent person to prevent five framings, then it seems clearly immoral to frame an innocent person rather than give five perfectly moral people two options each, one of which is framing an innocent person. Giving perfectly moral people extra options can’t be bad, because if the extra option is worse than framing an innocent person, they won’t take it; they’ll only take it if it’s better than framing an innocent person. Thus, we have the following conclusion.

If you should not frame an innocent person to prevent 5 murders, you should not frame an innocent person to prevent 5 framings. If you should not frame an innocent person to prevent 5 framings, then you should not frame an innocent person rather than give five perfectly moral people two options, one of which is framing an innocent person. But if this is true, then everyone in the first 99 circles ought always to take option 2, because they should not frame an innocent person merely to prevent 5 perfectly moral people from having two options, one of which is framing an innocent person. Unfortunately, if everyone takes option 2, we’d have to accept that a ring of perfectly moral people, all acting rightly, would result in 5^99 innocent people getting framed when, had they acted differently, only one person would have been framed. This is far more unintuitive than the utilitarian conclusion.
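The counting behind the circles argument can be checked with a short Python sketch. It assumes, as the setup does, that circle k (counting from 1) holds 5^(k-1) people, and it compares only the two extreme outcomes: the lone person in circle 1 framing one innocent person, versus everyone in circles 1 through 99 passing the choice outward so that each of the 5^99 people in circle 100 frames someone. The function names are illustrative, not anything from the original argument.

```python
def circle_sizes(n_circles: int = 100, branching: int = 5) -> list[int]:
    """People per circle: 1, 5, 25, ... (circle k holds branching ** (k - 1))."""
    return [branching ** k for k in range(n_circles)]


def framings(first_frames: bool, n_circles: int = 100, branching: int = 5) -> int:
    """Innocent people framed under two extreme policies.

    first_frames=True: the person in circle 1 takes option 1 and frames one person.
    first_frames=False: everyone in circles 1..n-1 takes option 2, so every member
    of the outermost circle ends up framing one innocent person.
    """
    if first_frames:
        return 1
    return circle_sizes(n_circles, branching)[-1]


sizes = circle_sizes()
print(sizes[:4])                 # [1, 5, 25, 125]
print(framings(False) == 5**99)  # True
```

Python integers have arbitrary precision, so 5^99 is computed exactly rather than approximated.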

Morality doesn’t care about who takes actions—it instead cares about what actions are taken. Our intuitions are wrong. This conclusion may seem repugnant. Fortunately, Huemer already accepts the repugnant conclusion.

Non utilitarians will have to do better than this rights nonsense in order to demonstrate a decisive counter-example.

Contra Huemer on utilitarianism part 5 (Alternatively, a post to get out of being forced to be an electrician during a sports game)

Why sports are maybe better than torture is bad

Note: This argument only applies to non-Yankees games; Huemer is obviously right in the context of Yankees games.

“I hate torture as much as the next guy.

That much is truer

(than other false things) But I shut up and multiply

Unlike Mike Huemer”

—Mark Twain

Michael Huemer has another objection to utilitarianism, based on it subordinating torture avoidance to sports games. He writes “d. Sports match

A sports match is being televised to a very large number of people. You’ve discovered that a person has somehow gotten caught in some machine used for broadcasting, which is torturing him. To release him requires interrupting the broadcast, which will decrease the entertainment of a very large number of people, thus overall decreasing the total pleasure in the universe. Should you leave the person there until the match is over?”

Say it with me now, yes!! Let’s call this person Jones, as Scanlon did.

In this case, we can apply a similar method to the one applied to the repugnant conclusion, or torture versus dust specks. Suppose that we compare Jones’ situation to two people experiencing painful electric shocks that are each only 90% as painful as Jones’ shocks. Surely it would be better to prevent the shocks to the two people. Now compare each of those two shocks to two more shocks, which are 60% as painful as Jones’ original one. Surely the four shocks are worse than the two. We can keep repeating this process until the situation is reduced to a large number of barely painful shocks. Surely a large number of people enjoying football can outweigh the badness of a large number of barely painful shocks. A similar point has been made by Norcross (2002).
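The shape of this chain can be sketched in Python. The numbers are hypothetical: the steps above go from 100% to 90% to 60% of Jones’ pain, while this sketch simply doubles the number of shocks and multiplies each shock’s intensity by an assumed fixed factor of 0.75 at every step, stopping once each shock is below an assumed threshold of 0.1% of the original. It only tracks how the sequence evolves; whether each step is really worse than the last is the philosophical claim, and the code does not settle it.

```python
def shock_chain(factor: float = 0.75, threshold: float = 0.001) -> list[tuple[int, int, float]]:
    """Return (step, number_of_shocks, intensity_per_shock) for each step.

    Step 0 is Jones' single shock at intensity 1.0. Each later step doubles the
    number of people shocked and scales each shock's intensity by `factor`
    (a hypothetical value), until the per-shock intensity drops below `threshold`.
    """
    if not 0 < factor < 1:
        raise ValueError("factor must be between 0 and 1")
    step, count, intensity = 0, 1, 1.0
    chain = [(step, count, intensity)]
    while intensity >= threshold:
        step += 1
        count *= 2
        intensity *= factor
        chain.append((step, count, intensity))
    return chain


last_step, last_count, last_intensity = shock_chain()[-1]
print(last_step, last_count)  # 25 33554432
```

With these assumed parameters, about twenty-five steps turn one torture into tens of millions of barely perceptible shocks, which is the kind of endpoint the argument needs.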

Additionally, as Norcross points out, we regularly make similar tradeoffs. When we keep speed limits higher than the safest possible level, we accept that some number of people will die so that people can reach their destinations faster.

If every bad thing is just as bad as a few things that are a little bit less bad than it, then we can keep swapping out bad things for more slightly less bad things, until we get to a point of lots of barely bad things. However, lots of barely bad things can obviously be outweighed by lots of barely good things.

Huemer could argue that, while there is some number of football minutes viewed that would be worth one torture, utilitarianism sets that number far too low. But if there is some number of football viewerships that can outweigh the badness of a torture, the objection ceases to be persuasive. Maybe Huemer says the number required would actually be 3 million, while utilitarianism says it would be 1.1 million. However, once we grant that there is some amount, it’s hard to maintain that our ethical intuitions are at all reliable about the exact number of inconveniences whose badness equals that of one torture.

Aaaaaaaaaaaand now, for an overwrought dialogue, as must be included in every post.

Huemer: “You utilitarians think that some number of football games being seen is good enough to outweigh a torture.”

Much cooler utilitarian Winston Churchill: “As per the above principle, surely you’d agree that it would be worth it to allow one torture to continue to prevent all football from being canceled in one hundred billion worlds.”

Huemer: “I suppose so.”

Churchill: “Would you do it for 300,000 football games?”

Huemer: “Of course not. What kind of philosopher do you think I am?”

Churchill: “We’ve already established what kind of philosopher you are, now we’re just haggling over the price.”

Huemer: “There’s a big difference between 300,000 and 300 quadrillion.”

Churchill: “Would you do it for 300 million?”

Huemer: “Hmm—not sure.”

Churchill: “It seems strange that you claim our ethical system is incoherent when you don’t even have an order-of-magnitude estimate of the amount.”

Huemer: “Well, whatever the amount is intuitively it seems like yours is waaaaay too much.”

Churchill: “Well, given that we make tradeoffs like this all the time, it seems to reflect people’s revealed preferences. Additionally, if your intuitions got wrong the question of whether there is any such amount, why trust them to be right about the precise amount?”

Huemer: “I think intuition favors opposition to torture for reasons beyond the mere pain it causes.”

Churchill: “Sure, but presumably you think that based on other cases, which will be discussed in future articles. This was just showing that continuing torture for a football game is sometimes worth it. Once you’re tolerating some torture for the sake of continuing football games, your view seems intuitively insane to the average person. It’s like a schizophrenic person who thinks he’s Jesus making fun of another person who thinks he’s Gandhi.”

Contra Huemer on utilitarianism part 6

Against cookies for desert.

(Let’s all take a moment to be amused by the cleverness of the pun).

Like most of you, I frequently have the opportunity to distribute cookies between Ted Bundy and Mother Teresa. Huemer worries that utilitarianism gets the wrong result, writing “e. Cookie

You have a tasty cookie that will produce harmless pleasure with no other effects. You can give it to either serial killer Ted Bundy, or the saintly Mother Teresa. Bundy enjoys cookies slightly more than Teresa. Should you therefore give it to Bundy?”

Those who have the intuition that it should be given to Mother Teresa rather than Ted Bundy presumably think so because they believe that reward should be proportional to how much one deserves it. However, the concept of desert runs into thorny ethical questions.

1 If it is good to punish bad people, then we should trade off a certain amount of pleasure against the punishment of bad people. To give an example of an exchange rate, imagine we say that a year of punishment for a bad person is good enough to offset suffering equivalent to one punch in the face. If this is true, a googolplex bad people each being punished for a year, combined with as much suffering of benevolent people as a googol Holocausts, would be better than a world where everyone, including the bad people, is relatively happy. Given the absurdity of this, we have reason to reject this view.

The retributivist may reply by arguing that the value of retribution declines, such that punishing one bad person is worth a punch in the face, but repeated punches in the face outweigh any amount of punishment of bad people. However, this is implausible, given the mere addition paradox. It seems clear that one torture can be outweighed by several slightly less unpleasant tortures, each of which can be outweighed by several even less unpleasant tortures. This process can continue until a large number of “tortures,” each equivalent in pain to a punch in the face, is worse than one torture. If the number of bad people punished is large enough, it could thus outweigh the badness of horrifically torturing galaxies full of people.

They might hold the view that happiness for bad people is neither good nor bad. However, if they hold this view then they’d have to think that the moral relevance of one’s happiness doesn’t scale in proportion to how moral they are. If Ted Bundy’s happiness is morally neutral, then he would be equally deserving of happiness if he’d killed 100 extra people. This is implausible.

They might think that no one deserves to suffer but people deserve well-being inversely proportional to how moral they are, such that as a person tends towards being as immoral as Bundy, the moral relevance of their happiness tends towards zero. This runs into a few problems.

1 It would say that a great enough difference in how much each enjoys the cookie would make it right to give it to Bundy over Teresa, which is about equally counterintuitive.

2 It would say that the more bad things one does, the less marginal effect bad things have on their moral character.

They could bite the bullet; however, this view is so unintuitive that we have decisive reason to reject it.

Here are some more objections.

2 Kraaijeveld (2020) has argued for an evolutionary debunking of retributivism. It’s extremely plausible that we have an evolutionary reason to want to prevent people from doing bad things, so it’s unsurprising that we feel angry at bad people and want to harm them.

3 There’s an open question of how exactly we determine whom to punish. Do we punish people for doing bad things? If so, should we punish politicians who do horrific things as a result of bad ideas? Would an idealistic communist leader who brings their country into peril be worthy of harm? If it’s based on motives, then should we punish egoists, who only do what makes them happy, even if they help other people for selfish reasons? If we only punish those who possess both characteristics, would we not punish Nazis who truly believed they were acting for the greater good? Additionally, should we punish people who think meat is immoral but eat it anyway? If so, we’d punish a large percentage of people.

4 Our immorality is largely a byproduct of chance. Many serial killers would likely not have been serial killers had they been raised in a different family. Additionally, many violent criminals would not have been violent criminals had there not been lead in the water. Is it truly just to punish people for things outside their control that are causally responsible for their crimes? As history shows, had we been in Nazi Germany, we’d likely have been Nazis. In scenarios similar to the Stanford prison experiment, we’d do horrible things. It seems arbitrary that one deserves to suffer because of particular events, such that in the absence of those events one wouldn’t intrinsically deserve to suffer. Desert should not be held captive to real-world contingencies. Mother Teresa’s happiness shouldn’t matter hundreds of times more than the happiness of the average person.

5 This view runs into some other issues. Suppose that a person has thirty seconds every day when they turn evil. Would they deserve to suffer, but only for those thirty seconds? If they killed someone during those thirty seconds, would they deserve to suffer even outside those thirty seconds? What if Ted Bundy had a change of heart? Would he still not deserve cookies? Suppose a person takes ADHD medication, which incidentally makes them more moral. Should they try to eat cookies while on the medication, because during that time they’re more moral? What if a person took a drug that temporarily made them a psychopath? Should they avoid eating tasty food during that time, so as to shift their happiness toward the times when they’re more moral? Either horn of the dilemma poses problems.

If they say that one’s desert waxes and wanes over time based on how moral one is, this has several counterintuitive implications.

1 It would say that people’s happiness doesn’t matter during their dreams, to the extent that they do immoral things in dreams. At one point I had a very strange dream in which I killed many people (it happened in a way that made no logical sense, as dreams often do; I think it had to do with a button or something that caused people to cease existing, which was hard to explain). In that dream, I clearly had no moral qualms about anything. (Note: this doesn’t reflect anything about me broadly; just as dreams make some nonsense ideas seem to make sense, this one kept moral constraints from even entering my consideration.) It seems like my happiness in that dream is no less relevant morally than my happiness in any other dream.

2 It would say that people should time the rewards they’ll receive for when they expect to be most moral. For example, if one is planning to take an ethics class and thinks one will likely be extra moral after it, this view would say one should save cookies for after the class, even if one would enjoy them less then.

3 It would also say that a person who takes a drug that, for half an hour, turns them into a psychopath who wants to kill people deserves to suffer during that time (even if they’re locked up so that there’s no risk they kill anyone).

4 It would say that the happiness of babies and small children matters proportionately less, because they’re less moral.

However, if they take the other horn of the dilemma, and argue that one’s desert remains unchanged over time, this runs into problems of its own.

1 It would say that Ted Bundy would become no more deserving of happiness if he reformed completely.

2 It would say that if Ted Bundy and Mother Teresa switched morals, such that Bundy became compassionate and Teresa became interested in killing lots of people, Teresa would still be more worthy of cookies than Bundy.

3 It becomes impossible to measure how moral someone is. If one starts out moral but becomes immoral, this view gives us no way of determining what one deserves.

Perhaps you think that what matters is someone’s average moral character. Well, in that case, Bundy would be no more worthy of happiness before reforming than after reforming. Additionally, this view runs into a problem with mostly benevolent beings with very long lives. Suppose that a person is a horrendous serial killer for 1000 years, but after that becomes perfectly moral for the rest of a far longer life. Given that not even Mother Teresa is perfectly moral, on this account this person would be more worthy of a cookie than Mother Teresa, even while they’re still going around murdering people.

Utilitarianism gives a perfectly adequate account of why punishing bad people is justified. Punishing bad people deters bad behavior. We’ve developed an emotional attraction to punishing bad people, so we feel good about it, as if we’re punishing those who deserve it. Yet the concept of desert is, at the end of the day, not what matters. Dessert, on the other hand, does matter.

Contra Huemer on Utilitarianism Part 7

For torture: a non-rights-based defense

Subtitle is based on this.

“Then the Nazis tortured…”

—A story that never ends well

“What if (really bad thing) maximized utility? Utilitarianism says it would be good in that case.”

—A lot of arguments against utilitarianism.

I, like Michael Huemer, don’t like Nazis. Huemer’s next objection to utilitarianism is the following.

“f. Sadistic pleasure

There is a large number of Nazis who would enjoy seeing an innocent Jewish person tortured – so many that their total pleasure would be greater than the victim’s suffering. Should you torture an innocent Jewish person so you can give pleasure to all these Nazis?”

A few things are worth noting about this case.

1 Making them sadistic Nazis makes it seem like a larger bullet to bite. Defending sadistic Nazis is never a good look.

2 In any plausible real-world case, torturing an innocent Jewish person will not make things go best. Even if the pleasure Nazis experience when they torture an innocent Jewish person is prima facie good, it’s instrumentally bad. It would plausibly result in more torture of Jewish people, and it would take millions of Nazis who take immense pleasure in the suffering for that pleasure to outweigh the suffering.

However, thought experiments don’t have to be realistic, so it’s perfectly fair game for Huemer to stipulate an unlikely situation. Still, intuitions about farcical situations, where the net utility is precisely the opposite of what it is in real-world cases, are likely to be unreliable. Just as our intuitions about physics become less reliable when we stipulate very different situations, so do our intuitions about ethics.

If the Nazi pleasure is good, then enough Nazi pleasure outweighs the pain of Jews being tortured. Thus, the person who holds Huemer’s view would seemingly have to accept that the sadistic pleasure of bad people is not morally good.

Maybe you think that sadistic pleasure is necessarily bad. Well, this principle has compelling counterexamples. Consider a man called Tim. Tim derives immense satisfaction from watching scenes that appear to depict torture. Tim would never torture anyone and abhors violence. In fact, he sometimes feels guilty about his strange desires and donates vast amounts of money to charity. Tim also makes sure that the content he watches does not actually involve anyone being tortured; he spends hours searching for content that looks like torture but involves no actual torture. Additionally, we can suppose that this is the highlight of Tim’s life. He enjoys it so much that, without it, his life would be miserable. Despite suffering from clinical depression, Tim finds the experiences so enjoyable that he regards his life as generally good. It seems that in this case Tim is truly made better off by the joy he derives from this sadistic content.

However, suppose additionally that, despite Tim’s incredibly careful selection process, he is deceived by an evil demon who manipulates the laws of physics so that people are actually brutally tortured, even though any reasonable observer would conclude that no one was truly being tortured. It seems that in this case, while the person who is tortured is made worse off by the torture, Tim is made better off by it. All else equal, it seems that making Tim enjoy viewing the torture less (assuming he’d view the same amount of torture) is bad.

Imagine another case: an alien civilization that watches the suffering of humans. This alien civilization starts in a state of vast agony, yet becomes less miserable each time it watches a human suffer. If it views all human suffering, its overall hedonic state rises to zero, when it starts off significantly worse than being boiled alive. Again, it seems like the aliens’ sadism is not a bad thing.

If we think that enjoying the suffering of others is actively bad, independent of the suffering itself, then it would be morally good to make the aliens unable to see suffering. This is deeply implausible.

One might object that deriving pleasure from sadism is morally neutral, neither good nor bad. However, in both scenarios posited, it seems obvious that the world is better because the aliens enjoy suffering enough that they are not as miserable as beings being boiled alive. If the only way for the aliens to relieve their unfathomable agony were to torture one person, doing so seems justified.

We can imagine another case: a person, Wyatt, who takes immense satisfaction in eating meat because he knows that the animal suffered. He feels deeply guilty about this, but cannot enjoy eating meat unless he knows that the animal suffered. Wyatt continues to eat meat, but donates to charities that help animals because he feels guilty. In this case, it seems that Wyatt’s enjoyment of the meat, assuming it won’t cause him to eat any more meat, is not a bad thing. Wyatt enjoys meat because he knows about the suffering, while others enjoy meat that causes enormous suffering without caring whether they know about it; it’s hard to see how Wyatt’s enjoyment is any worse than ours. Just as there seems to be no morally relevant difference between a person who tortures others because they like it when others suffer and one who likes the taste of people after they’ve been tortured, there’s no difference between one who enjoys the suffering of the animals they eat and one who merely enjoys the animals’ taste.

If Wyatt is morally no different from other people when he eats meat, then either Wyatt’s sadistic meat eating is morally good or the joy that most people get from eating meat is morally neutral. The second option is deeply implausible: if meat tasted less good but people ate the same amount of it, that would be a worse world. And if sadistic pleasure can be good, then enough sadistic pleasure can outweigh the badness of torture.

Additionally, there are many cases where people enjoy the suffering of others that we don’t find objectionable. If the parents of a murder victim derive satisfaction from knowing that the murderer is rotting in prison, it wouldn’t be desirable to deprive them of that satisfaction.

We can also imagine a world exactly like our own, except that humans could never be happy in their lives unless, on their sixth birthday, they slapped someone in the face and reveled in it. In this case, all of their enjoyment comes from the fact that they slapped someone, but it wouldn’t be good to condemn everyone to never being happy.

Or imagine a scenario where every person will be given an extra year of happy life if one person is tortured. In this case, their happy year exists only because of the torture, but the torture seems clearly justified.

Our anti-sadism intuition comes from the fact that sadism is generally not conducive to happiness. However, if it were conducive to happiness, it would be justified. In cases like Schadenfreude, where we derive joy from the suffering of others in ways that make things go best, we don’t find it counterintuitive.

Maybe you think that what matters is avoiding sadistic pleasure from bad people. However, as the last article argued, attaching moral relevance to desert cannot be justified.

Perhaps you think that it’s actively bad when people derive sadistic pleasure from torturing people. If this were true, then in Huemer’s case most of the badness wouldn’t come from the torture; most of it would come from the Nazis getting pleasure. On this account, enough Nazis getting pleasure from the Holocaust would be worse than the Holocaust itself. This is deeply implausible.

Maybe you think that sadistic Nazi pleasure is morally neutral. This view runs into issues. Consider a variant of the alien case above. There is just one alien, and it would be horrendously miserable absent deriving pleasure from suffering. Let’s also stipulate that the alien is a Nazi and that the only way to relieve its suffering is to torture one Jewish person. Absent that torture, the alien’s hedonic state is more negative than the sum total of all suffering experienced by Jews during the Holocaust. In this case, it seems intuitively like the torture is justified.

Now we can switch up the case. Instead of one Nazi alien who experiences 100^100 times as much suffering as an individual, there are now 10^10000000 Nazis who start in a state of moderate unpleasantness, which turns positive if a Jewish person is tortured. In this case, there are so many Nazis that there’s more total Nazi utility. It doesn’t seem like many Nazis each getting a little pleasure is morally different from one Nazi getting a great deal of pleasure. Thus, if we accept that the Nazi alien should torture the Jewish person, we should also accept that the many aliens should collectively torture the Jewish person.

This case may still seem a little counterintuitive. However, remember two things.

1 If you had an equal chance of being any of the affected parties, you would torture the person.

2 If you lived the lives of all of the people affected, you’d be in favor of torturing the person. The bad things in the real world lack these two features. If one were as likely to be Ted Bundy’s victim as to be Ted Bundy, one would oppose Bundy killing people. The same holds if you lived the lives of both Bundy and his victims.

Maybe you’re still not convinced and think the conclusion is just too counterintuitive. I think your strong intuition arises from several separate intuitions that combine in this case.

1 Retributivist intuitions, which say that bad people deserve to suffer.

2 Anti-sadism intuitions, which say that it’s bad when people derive pleasure from the suffering of others.

3 General opposition to Nazis and to torture.

However, I think I’ve argued persuasively against the first two, and the third is perfectly compatible with this view. Nazis and torture are bad because they cause lots of suffering.

A lot of people eat meat. When they eat meat, particularly from factory farms, they are getting pleasure from the suffering of others. Perhaps not directly from the suffering, but that distinction isn’t morally relevant. If Nazis got pleasure from Jews being tortured because they thought it would deter more insidious Jews from doing bad things, rather than from pure sadism, that would presumably not change the moral status of the torture. So getting pleasure from a byproduct of torture seems morally equivalent to getting pleasure from torture. Thus, this view would likely commit you to thinking that torturing and killing one animal to give the entire world meat would be unjustified. This is deeply counterintuitive.

Maybe you think that there’s some fundamental difference between humans and animals, such that it’s fine to revel in a byproduct of the torture of animals. Then the question arises: in virtue of what does that difference arise (lots of arising in this sentence)?

I offer the following lengthy dialogue (a signature of Bentham’s Bulldog posts).

Jim: “There’s a morally relevant difference between humans and animals that justifies the difference.”

Michael Huemer appears from nowhere with a bang (Huemer, like me, is strongly ethically opposed to current meat eating practices).

Huemer: “What’s the cause of the morally relevant difference?”

Jim: “Well, they’re a different species.”