Betting On Ubiquitous Pain

Pain in creatures of significantly debated consciousness: much more than you wanted to know

1 My basic view

'Cause everybody cries

Everybody hurts sometimes

Sometimes everything is wrong

—R.E.M., “Everybody Hurts”

You didn’t think the era of absurdly long posts was over, did you?

Prior to the 2024 election, there was a series of swing states that pundits forecast could go either way. All of them ended up going to Trump by a fairly wide margin, as predicted by some people (e.g. Glenn, Keith Rabois). None of the states that were solidly blue went to Trump, but all the states that pundits and prediction markets weren’t sure about went red.

I have a similar bet regarding consciousness.

I suspect that all of the species that are currently of significantly debated consciousness (call them swing state species) are conscious. This would include crabs, lobsters, fish, and most insects, but it wouldn’t include oysters or AI, since there’s fairly widespread agreement that those aren’t conscious. Oysters are like California.

Furthermore, I suspect that they all suffer fairly intensely—they don’t just have very basic slightly negative experience. This would include fish (though they are reaching the point where their suffering is beyond doubt), crustaceans (like shrimp, crabs, and lobsters), and insects.

Here is a thesis that seems decently intrinsically probable which I’ll refer to as the ubiquitous pain thesis: feeling pain is fairly ubiquitous across the animal kingdom, such that all the species of significantly debated sentience are sentient. I think, aside from the simplicity, plausibility, and naturalness of the thesis, there are five reasons to think it’s right that don’t require reviewing any complex behavioral evidence.

The first reason is evolutionary: it’s beneficial for a fish or insect to feel pain for the same reason it’s beneficial for you to feel pain; it encourages them to avoid dangers. If we hadn’t observed any creatures, we should expect decent odds that many creatures feel pain because of the obvious evolutionary reasons supporting pain.

Second, these creatures act in most ways like they’re in pain. If an insect or shrimp is exposed to damage, it struggles with great effort and tries to get away. Either they are in pain or they evolved a response that makes them behave like they’re in pain. But if a creature struggles and tries to get away, a natural inference is that it’s in pain. This is why we’d naturally assume that, if we came across large aliens that responded aversively when we cut them, they probably felt pain, even if we hadn’t yet done studies on the nature of their brain.

If you look at how a dog acts when you hurt it, you’re justified in thinking it’s conscious even if you don’t know any neuroscience. For the same reason, we have justification for thinking that pain is widespread in creatures that act like they’re in pain.

Third, the inductive track record has clearly been in the direction of attributing pain to more and more creatures. Prior to the 1980s, it was widely believed that animals didn’t suffer at all. Before 2002, the belief that fish couldn’t suffer was still widespread. Over time, more and more creatures have been recognized as sentient. By induction, we should think we’re likely still underestimating the scope of animal consciousness.

Fourth, there’s a probabilistic argument for this conclusion. Suppose one starts with a prior of .5 in consciousness being widespread across beings that act sort of like agents (including fish, insects, reptiles, and so on) and a prior of .5 in consciousness requiring specific brain regions. Conditional on consciousness being widespread, it’s guaranteed that there could be creatures like us that could sustain consciousness, while if consciousness is limited, then it might be that creatures like us can’t be conscious (perhaps only reptile brains could give rise to consciousness or a kind of very advanced fish brain). Thus, given that we’re conscious, we should update in the direction of thinking more beings are conscious, just like upon seeing you have a bellybutton, ceteris paribus, you get evidence that more humans have bellybuttons (it’s unlikely you’d be the outlier).
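Here is a minimal sketch of that update as plain Bayes’ rule. The .5 prior comes from the paragraph above; the likelihood under the “limited” hypothesis (the chance that creatures like us would be among the conscious ones if consciousness needs some specific kind of brain) is a number I made up purely for illustration, and the function name is hypothetical too.

```python
def posterior_widespread(prior_widespread: float, likelihood_if_limited: float) -> float:
    """P(consciousness is widespread | creatures like us are conscious)."""
    # On the widespread hypothesis, creatures like us being conscious is guaranteed.
    likelihood_if_widespread = 1.0
    prior_limited = 1.0 - prior_widespread
    # Total probability of the evidence under both hypotheses.
    evidence = (prior_widespread * likelihood_if_widespread
                + prior_limited * likelihood_if_limited)
    return prior_widespread * likelihood_if_widespread / evidence


# 0.5 prior from the text; 0.5 likelihood is an illustrative assumption.
print(round(posterior_widespread(0.5, 0.5), 2))
```

With those made-up inputs, the posterior on widespread consciousness rises from .5 to about .67. The size of the update depends entirely on the assumed likelihood: the less likely it is that brains like ours would make the cut under the limited hypothesis, the bigger the shift, and if that likelihood were 1 there would be no shift at all.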

Fifth, if one is a dualist, then they should have a preference towards attributing consciousness to fairly simple creatures, just as the other fundamental features of the universe show up at the very basic level. If one isn’t a dualist, then probably consciousness has to do with the right kind of information processing, yet even these simple creatures have brains that process aversive information.

When you combine these five arguments (behavioral, evolutionary, inductive, probabilistic, and theoretical, or BEIPT for short), they make a pretty strong prior that most of the animals of debated sentience are sentient. The thesis they support is simple and natural: that a creature with a brain that processes stimuli negatively is conscious is a very plausible hypothesis.

Thus, even before looking at the specific experimental evidence, I think we should think it’s decently likely that the species of debated sentience are all conscious (though unlike Eliezer, I don’t come to my conclusions about animal consciousness from the armchair without reviewing any evidence). The most convincing evidence for this thesis that I’ll present is behavioral—I’ll argue that across each debated species, there’s convincing evidence of sentience. But before I do that, I’ll defend the judgment that these animals don’t just feel a small amount of pain, but instead suffer relatively intensely.

Section summary: there are behavioral, evolutionary, inductive, probabilistic, and theoretical reasons to suspect that animals of debated consciousness are conscious. Given that the hypothesis that pain is ubiquitous is simple and parsimonious, these considerations make it decently likely.

2 Intensely? What about neuron count?

The most basic reason to think animals suffer intensely is fairly simple: they act like they suffer intensely. They strain with great force to get away from harmful experiences. So long as we grant that they feel pain, from their behavior, it seems like they feel a lot of it. Note: here I’m not arguing that they do feel pain, just that if they feel pain it’s probably pretty intense. So just judging basically from animals’ behavior, they seem to feel pain relatively intensely. When lobsters are boiled alive, for instance, they struggle so much that chefs often leave the room because it’s so unpleasant to watch.

Suppose we were around in 1970, before much neuroscience on dogs had been done. We observed that when you put a dog in a cage, and then prod it, it strains with great force to try to get away. It would be reasonable to think that it suffers a good deal just because of its behavior. The same would be true if we came across very brain-damaged humans (perhaps missing some important features of the brain and many neurons), aliens, or other animals. This should likewise justify a presumption in favor of thinking animals feel a lot of pain.

The evolutionary considerations presented in the last section give reasons to think that animals feel intense pain: being in a dazed, barely-conscious state isn’t evolutionarily advantageous. In fact, there might even be reason to think that animals feel pain more intensely than we do. If a creature isn’t very intelligent, it may need more pain to teach it to do something than if it can cognitively reflect. As Dawkins says:

Widely assumed human pain more painful than other species. But pain’s Darwinian purpose is warning: “Don’t do that again. Next time it might kill you.” So perhaps the more intelligent the species the less pain needed to ram warning home. Animals might suffer more than us.

Additionally, if a creature has a simple mind, it cannot think about anything other than the pain—when it hurts, its entire world would be pain. The worst sorts of pains are those that occupy our entire consciousness—yet for animals, this is probably true of literally every pain! This theme is explored well in Ursula Le Guin’s story Vaster Than Empires And More Slow. Ozy Brennan also depicts it well:

I am not sure if you have ever experienced 10/10 pain—pain so intense that you can’t care about anything other than relieving the pain, that you can’t think, that your entire subjective experience consists only of agony. Pain so intense that you are begging to be allowed to commit suicide because death would be better than enduring one more moment.

I have.

It was probably the worst experience of my life.

And let me tell you: I wasn’t at that moment particularly capable of understanding the future. I had little ability to reflect on my own thoughts and feelings. I certainly wasn’t capable of much abstract reasoning. My score on an IQ test would probably be quite low. My experience wasn’t at all complex. I wasn’t capable of experiencing the pleasure of poetry, or the depth and richness of a years-old friendship, or the elegance of philosophy.

I just hurt.

So I think about what it’s like to be a chicken who grows so fast that his legs are broken for his entire life, or who is placed in a macerator and ground to death, or who is drowned alive in 130 degrees Fahrenheit water. I think about how it compares to being a human who has these experiences. And I’m not sure my theoretical capacity for abstract reasoning affects the experience at all.

When I think what it’s like to be a tortured chicken versus a tortured human—

Well. I think the experience is the same.

Brian Tomasik also makes the point clear, arguing that what might matter to suffering is not how many aversive signals there are but what proportion of a brain’s signals are aversive. Such a thing would favor pain in smaller creatures who spend more of their cognitive architecture on feeling pain. Tomasik writes:

It may be that the amount of neural tissue that responds when I stub my toe is more than the amount that responds when a fruit fly is shocked to death. (I don't know if this is true, but I have almost a million times more neurons than a fruit fly.) However, the toe stubbing is basically trivial to me, but to the fruit fly, the pain that it endures before death comes is the most overwhelming thing in the world. There may be a unified quality to the fruit fly's experience that isn't captured merely by number of neurons, or even a more sophisticated notion of computational horsepower of the brain, although this depends on the degree of parallelism in self-perception.

Tomasik gives an example where 5 “I don’t like this” messages are processed by two individuals, one a human, the other a snail. For the human, these messages are slight in comparison to other things it’s experiencing, but for the snail, these are the only thing it’s experiencing. Intuitively it seems likely that the 5 “I don’t like this” messages would be felt more intensely by the snail, because its brain is unified in receiving that pain as its only signal, while for the human, it’s a small part of a larger picture. He then cites Daniel Dewey for the following point:

if the simplicity of the notional world an animal brain/mind/computer has decreases, it seems possible that each computation is more "intense" in that it represents a larger subset of the whole of possible spaces that mind can be in. So a simple pain not-pain system would experience cosmic amounts of pain. Whereas a complex intricate system, like us, would experience pain as a small subset of its processing.
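To make the proportion idea concrete, here is a toy calculation based on Tomasik’s five-message example. The only number taken from the text is the 5 aversive messages (and that they are all the snail is processing); the human’s total of 1,000 concurrent messages is a made-up figure, and treating “share of messages” as a stand-in for intensity is the assumption under discussion, not an established measure.

```python
def aversive_share(aversive_messages: int, total_messages: int) -> float:
    """Fraction of everything a mind is processing that is aversive."""
    if total_messages <= 0:
        raise ValueError("total_messages must be positive")
    return aversive_messages / total_messages


AVERSIVE = 5        # "I don't like this" messages, from Tomasik's example
SNAIL_TOTAL = 5     # for the snail, those messages are all there is
HUMAN_TOTAL = 1000  # hypothetical: the human is processing lots of other things

print("snail:", aversive_share(AVERSIVE, SNAIL_TOTAL))
print("human:", aversive_share(AVERSIVE, HUMAN_TOTAL))
```

The absolute count is identical for both, but on this toy picture the snail’s experience is entirely pain while the human’s is half a percent pain. Whether intensity really tracks this ratio is exactly what’s at issue, so treat this as an illustration of the hypothesis rather than evidence for it.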

A version of the argument from marginal cases can also be made in favor of thinking animals suffer intensely. Imagine we came across a human with very severe brain damage, missing much of his brain, with many higher functions eroded. However, despite this, he reacted like he was in pain, desperately struggling to get away. We wouldn’t assume that he was only barely conscious. So why do we assume this about animals?

Additionally, if we take seriously the inductive trend discussed in the first section (that we persistently underestimate animal sentience), it should bias us toward higher estimates of animal pain rather than lower ones.

Finally, the most detailed report ever compiled on the subject of animal sentience concluded that animals feel fairly intense pain. Shrimp, for instance, were estimated to feel pain, on average, 19% as intensely as we do. Furthermore, I think this is likely an underestimate—if one simply looks at the number of proxies that we expect to correlate with pleasure and pain or sentience, rather than (in my view arbitrarily) squaring or cubing things or relying on neuron count, they get an even higher estimate. To be clear, this isn’t a criticism of the report which I think was quite good, especially for being modest and trying to incorporate lots of different ways of measuring sentience—I think my judgments would line up more with the higher estimates, for reasons I’ve described.

The main way people argue that animals don’t feel much pain is by relying on neuron counts (see Anatoly Karlin, for instance). Because animals tend to have many fewer neurons than we do, it’s argued that they don’t feel much pain. The problem is that neuron counts are a totally bogus way of measuring sentience, as Rethink Priorities argues convincingly.

In the brain, there’s just no correlation between neuronal activation or brain mass and sentience. No serious neuroscientist has proposed that we can measure the valence of experience by looking at the number of neurons, and often there’s an inverse correlation between neuronal activation and intensity of experience. Psychedelics, for instance, produce more intense experience by shutting down many brain regions. As the Rethink Priorities report notes:

For there also are a large number of studies showing an inverse relationship between brain volume and the intensity of particular affective states. In particular, when it comes to chronic pain, by far the most commonly cited relationship between brain volume and chronic pain is a decrease in brain volume in regions commonly associated with the experience of pain. For example, see the Davis et al. (2008) article “Cortical thinning in IBS: implications for homeostatic, attention, and pain processing”.

These seem to show that what matters for intensity of valenced experience is the functions performed by different brain regions rather than simply whether brain regions exist. But animals have brains that perform similar functions (e.g. fish brains perform similar functions to mammalian brains but in a different way). In addition, as RP notes, while neuroscientists have tended to think that changes in brain regions can detect whether a person is in pain, there are no indications that such things can be used to determine relative pain between different humans. Doctors don’t decide which of two people needs analgesia more by looking at their numbers of active neurons.

Were we to rely on neuron counts, we’d conclude that elephants have experiences much more intense than humans (for they have around 260 billion neurons and much greater brain size and mass). On this picture, despite pigs and elephants behaving mostly similarly, elephants would matter more than 500 times as much as humans. Children tend not to have many more neurons than adults, though they seem to suffer much more intensely.

Such a view also fits poorly with cases involving humans. There are numerous cases of people missing large chunks of their brains without it seeming to affect their experience. In one case, about 90% of a man’s brain was liquid (though it’s hard to know how many neurons were destroyed) yet he still seemed to experience normally. About half of another man’s brain was removed after a deadly accident, removing many of his neurons, yet this didn’t seem to affect his consciousness.

For this reason, we shouldn’t have a strong bias in favor of denying animal consciousness based on neuron counts or brain mass. It’s a totally bogus metric supported by almost nothing and undermined by lots of neuroscientific evidence. While it would be nice if it worked, the fact that it would be nice if P doesn’t, in fact, mean P.

Section summary: Animals probably aren’t just a bit conscious but feel pain intensely. This is supported by considerations involving behavior (they struggle as if they’re in a lot of pain), evolution (feeling only a bit of pain is less adaptive for it produces less significant action), learning (pain serves to teach animals not to do things, but a dumber animal may need a stronger signal to be taught a lesson), totality (for simple creatures, pain makes up their total experience), induction (we’ve consistently underestimated animal pain), marginal cases (we’d assume humans with similar behavior and dysfunction felt a lot of pain), and relative signal strength (for simpler creatures, pain signals make up a bigger part of their cognitive architecture). The pithy acronym is beltimr. The main reason for denying this relies on using neuron counts as a proxy for moral worth, but these are a horrible, terrible, no good, very bad proxy.

3 Fish

Okay, technically fish are a swing species in that there isn’t complete agreement, but there’s a pretty broad scientific consensus that fish feel pain. That fish feel pain is recognized by the American Veterinary Medical Association and the Royal Society for the Prevention of Cruelty to Animals (RSPCA).

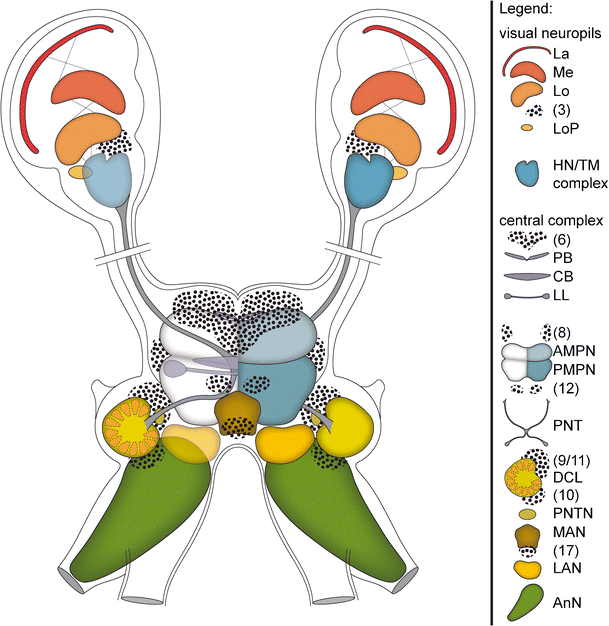

Lynne Sneddon released a report attempting to assess the likelihood of pain in aquatic animals. The result: everything that we’d expect to correlate with feeling pain is exhibited in fish.

(Little sneak peek into the case for crustacean suffering).

One study found that when fish were injected with painful venom on their lips, they behaved differently, rubbing their lips against the side of the tank, using their lips less, swimming less, and rocking back and forth (engaging in repetitive tasks when in pain is also observed in humans and other mammals). I’d also behave differently if some asshole injected painful venom into my lips!

Their heart rate also went up, like ours does when we’re in pain. However, this effect was eliminated by giving them a painkiller. Such results make natural sense on the hypothesis that fish feel pain that’s dulled by painkillers.

Another study also by Sneddon found that fish that were in pain would swim to a location they liked less if they were consistently given a painkiller while in the region. This means fish actively seek out painkillers, which makes a lot of sense if they’re in pain, but is otherwise rather mysterious. A report (also by Sneddon lol—this woman is everywhere!!!) finds:

Fish learn to avoid electric shocks usually in one or a few trials (e.g. Yoshida and Hirano, 2010). This avoidance behaviour persists for up to 3 days (Dunlop et al., 2006), but after 3 days of food deprivation fish will risk entering the shock zone to obtain food (Millsopp and Laming, 2008). This demonstrates that teleost fish find electric shocks so aversive they alter their subsequent behaviour.

One effect that pain tends to have is that it distracts people from other things. For example, if I’m trying to watch a soccer game, and then you kick me in the face, I’ll stop focusing on the soccer game. One way to test pain in fish, therefore, is to look at whether it distracts them from other things. Normally, trout are scared of new objects. However, when they’re in pain, they’re so distracted by the pain that they won’t pay attention to new objects, and won’t react aversively to them.

Trout also like hanging out with their friends. They make tradeoffs between this and receiving electric shocks, so that as long as the electric shocks in a region where their friends are hanging out are mild, they hang out with their friend. If the shocks get too powerful, however, they avoid their friend (this is what I do with my friend: I’ll endure up to a 5 hz electric shock to hang out with them). This effect is eliminated by morphine. They also make similar sorts of tradeoffs between eating and electric shocks, making complex tradeoffs between degree of electric shock and food eaten. If they like food better, they’ll endure a greater electric shock to get it. Me too! I’ll endure a lethal dose of electricity to get a sufficiently good thing of vegan curry.

Fish also have pretty insane cognitive abilities. They can solve mazes and do all sorts of other complex tasks. Ozy notes:

Other cognitive abilities also matter. Cichlids and Siamese fighting fish can remember the identities of fish that they've watched fight. Cichlids are, in fact, capable of transitive inference. In one study, a cichlid "bystander" was allowed to watch other cichlids fight: A beat B, B beat C, C beat D, and D beat E. The cichlid was then placed in a tank with, say, B and D. The cichlid will reliably swim towards D, the weaker fish, even though they’ve seen both B and D win once and lose once. It’s not a status communication: a cichlid who hasn’t observed any fighting, if placed in a tank with B and D, is equally likely to swim towards either of them.
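The reason this counts as transitive inference is easiest to see if you write the fights down. The sketch below uses only the four fights from the quoted description; the function names are mine, and this is a picture of the logical structure of the task, not a claim about what is going on inside a cichlid.

```python
# (winner, loser) pairs the bystander watched, per the quoted description.
FIGHTS = [("A", "B"), ("B", "C"), ("C", "D"), ("D", "E")]


def win_loss_record(fish, fights):
    """Simple tally: how many fights this fish won and lost."""
    wins = sum(1 for winner, _ in fights if winner == fish)
    losses = sum(1 for _, loser in fights if loser == fish)
    return wins, losses


def outranks(stronger, weaker, fights):
    """True if a chain of observed wins leads from `stronger` down to `weaker`."""
    beaten_by = {}
    for winner, loser in fights:
        beaten_by.setdefault(winner, set()).add(loser)
    frontier, seen = [stronger], set()
    while frontier:
        current = frontier.pop()
        for loser in beaten_by.get(current, ()):
            if loser == weaker:
                return True
            if loser not in seen:
                seen.add(loser)
                frontier.append(loser)
    return False


print(win_loss_record("B", FIGHTS), win_loss_record("D", FIGHTS))
print(outranks("B", "D", FIGHTS), outranks("D", "B", FIGHTS))
```

B and D have the same tally (one win, one loss each), so just counting wins can’t tell them apart. The only way to rank B above D is to chain B beat C with C beat D, which is the inference the bystander fish appears to make.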

Fish also have various features that we’d expect to go with consciousness, being able to communicate and remember for months or years areas where they encountered predators so that they avoid them. While it’s conceivable that a creature could communicate without being conscious, being conscious certainly makes such capabilities more likely.

Additionally, there’s decent evidence that fish dream. But to dream, they have to be conscious. If they’re conscious, they can feel pain. Sure, it’s possible that they look and act like they’re dreaming but aren’t really dreaming, but it’s also possible that you look and act conscious but aren’t really conscious. If it looks like a duck and acts like a duck…

There are a few broad classes of objection to the idea that fish feel pain. The first involves claims that fish can’t feel pain because they don’t have the right brain regions. Such a view is quite controversial. Culum Brown writes:

The vast majority of commentaries, however, do not, and argue that fish most likely feel pain. Most agree that Key’s argument is flawed at best, and his evidence of how pain works in humans is selective, simplistic, misleading and outdated (Damasio & Damasio; Merker; Panksepp; Shriver). One emerging consensus, however, is that no single line of scientific evidence should over-rule any other, and many of the respondents agree that behavioural studies, such as conditioned place aversion, are an important component to understanding pain in human and nonhuman vertebrates alike.

The primary message from these commentaries is that Key’s argument is fundamentally flawed from an evolutionary perspective. He argues (although later denies it) that human brain architecture is required to feel pain. The mechanistic approach centres around the role of the cortex in human pain. But as Dinets, Brown, Ng and others point out, the human cortex has taken on a huge number of roles that once were the domain of other brain regions. To suggest that fish don’t feel pain because they lack a cortex, one would also have to write-off consciousness (Seth) or indeed any cognitive function that occurs in the human cortex. One example of applying this logic would be to conclude that fish are not capable of learning either. Clearly this argument is absurd (see Brown et al. 2011; Brown 2015 for reviews). Jones, Mather, Striedter, Elwood and Edelman all point out that Key’s approach also denies any possibility of convergent evolution, but surprisingly Key accepts that birds might provide an example of an independent evolution of a cortex-like structure. Haikonen, Manzotti, and Seth take it one step further and suggest that we don’t even understand pain in humans yet, and it is far too early to be making judgement calls on other taxa. The role of the cortex in human pain is still debated (Stevens; Damasio & Damasio) and is most likely not the “on-off switch” for consciousness (Segner). Walters argues that high levels of consciousness are not required for pain perception, a view which is consistent with evolution being a gradual process rather than occurring as all-or-nothing leaps and with the notion that pain is likely an ancient evolutionary trait.

Thus, it seems like the consensus view is that the brain regions that fish have probably are enough for consciousness (when a rat’s cerebral cortex is removed, they continue to function mostly normally, and some humans are born without cerebral cortexes). Additionally, even if human pain requires certain brain regions, that doesn’t mean that pain in general does. Humans and fish see in very different ways, yet both see—consciousness could be similar.

The other popular argument against fish pain is that after being injured, fish will go back to feeding normally within a short time. But this is entirely explicable on the assumption that fish feel pain: fish have more A fibers than C fibers. A fibers are responsible for quick, shooting pain, while C fibers are responsible for long-lasting, dull, aching pain. Thus, fish might not feel as much dull, long-lasting pain, but they certainly feel pain when hurt.

It’s true that fish nociceptors don’t always respond to the same stimuli as human nociceptors, but this isn’t an argument against fish nociception. Some mammals don’t have nociceptors that respond to acid, yet they’re still surely conscious.

Some fish, like sharks, are known as elasmobranchs: their skeletons are made of cartilage rather than bone (no offense). In elasmobranch fish, nociceptors have yet to be detected, and elasmobranch fish seem to shrug off injury to an insane degree. You can commit a super egregious microaggression against elasmobranch fish, and they’ll ignore it. Thus, one might think that elasmobranchs don’t feel pain and use this as evidence against fish pain. But I think this is a bad argument (see also this post on the EA forum).

First, even if they don’t feel bodily pain, this tells us little about whether they can be in unpleasant states. The reason why they’d evolve to not feel pain is that they’re constantly getting bitten and injured in insane ways—e.g. they’ll often eat things with giant spikes. For such creatures, feeling pain wouldn’t be adaptive, so perhaps they no longer feel pain when injured. However, it’s still plausible that they’re conscious and can suffer.

Second, the reason why they might not feel pain is that there might be direct evolutionary pressure for them not to feel pain. But such a thing doesn’t apply to other fish more broadly. Thus, inferring from these creatures not feeling pain that others must not is a bad inference.

Third, we don’t really know if they have nociceptors. We haven’t discovered them yet, but that doesn’t mean they aren’t there (it took until 2002 to discover nociceptors in fish at all).

Fourth, the fact that they shrug off injury doesn’t show that they don’t feel pain. Some mammals shrug off injury to a great degree but still feel pain. Perhaps they need to really be injured to feel pain. As Max Carpendale notes in the above EA forum post, probably the sharks that don’t feel any pain would go the way of humans who don’t feel pain, being susceptible to constant injury.

Section summary: fish respond to painkillers, remember and avoid places where they were hurt, actively seek out painkillers, respond behaviorally as if they were in pain by exhibiting distress and rubbing the injured area, have physiological responses characteristic of pain, avoid using injured areas, and make complex tradeoffs between pain and gain. Everything about fish behavior when injured seems to suggest pain.

4 Shrimp shrimpy shrimpy shrimp (and other crustaceans)

(While we’re on the topic of shrimp, I’ve recently made the case that you should give money to the shrimp welfare project—in total, that article triggered a chain of events that helped almost half a billion shrimp. Some of this section—though not most of it—is repeating points I’ve made there).

Shrimp have most features that we’d expect to go with consciousness (like being my friends😊; I have a great relationship with the shrimp, they tell me “sir, you’ve really been doing a beautiful thing in terms of, swp, they call it swp, and that’s really a tough thing if you think about it, they’re ripping off the shrimp in a way that nobody could believe”). They communicate, integrate information from different senses into one broad picture, make trade-offs between pain and various goods, display anxiety, have personal taste in food, and release stress hormones when scared. When shrimp are injured, they rub the wounded area in the way animals do when in pain. Other crustaceans behave like we do when we’re in pain, being likelier to abandon a shell when they’re given more intense electric shocks, such that their abandonment is a function of both the desirability of the shell and the strength of the shocks. One study looked at the seven criteria that are the best indicators of pain:

(1) a suitable central nervous system and receptors, (2) avoidance learning, (3) protective motor reactions that might include reduced use of the affected area, limping, rubbing, holding or autotomy, (4) physiological changes, (5) trade-offs between stimulus avoidance and other motivational requirements, (6) opioid receptors and evidence of reduced pain experience if treated with local anaesthetics or analgesics, and (7) high cognitive ability and sentience.

It concluded that crustaceans, which include shrimp, lobsters, and crabs, score highly on every criterion. They have a complex nervous system that bundles together many kinds of information; exhibit avoidance behavior, so that they remember and avoid places where they were hurt; nurse injured areas and try to remove sources of pain; have physiological changes like dilated pupils in response to pain, just like we do; make trade-offs between pain and other goods; have opioid receptors and respond positively to painkillers; and exhibit complex behavior.

Their complex behaviors were especially cool. Lobsters demonstrate incredible navigation—having a much better sense of direction than I do. If a lobster is going somewhere, and you move it quite significantly, it can sense how it’s being moved and reorient towards where it’s going. I can’t do that! Hermit crabs use several different sources of information to learn about new gastropod shells, integrated into a broad picture, and can remember crabs they fought for up to four days. Whenever I fight a crab, I can’t tell it apart from the other crabs! Hard to imagine them doing that without being conscious.

In 2021, the London School of Economics published an extremely detailed 107-page report on invertebrate sentience. While they ended up uncertain in many cases of whether invertebrates possessed some correlate of consciousness, in every case it was because of lack of data. Not a single piece of evidence they reviewed counted against the sentience of any crustacean. At the end of the report, they recommended extending legal protections to every invertebrate investigated.

A report (cited earlier when discussing fish) by Sneddon that looked at whether we have evidence that decapods (the kind of animal that includes shrimp, crabs, and the like) feel pain concluded that they meet almost every criterion that we have reason to expect goes with pain.

Consistently, either there’s no evidence about crustaceans or it ends up confirming their sentience. It never seems to go the other way. Like fish, if crabs are shocked in some location, they avoid it in the future, remembering it. This makes sense on the supposition that they feel and remember pain but is otherwise largely mysterious.

One thing that we’d expect to go with consciousness is individual personality differences. Humans have different personalities as do other animals that we know to be conscious. Oysters have no personality (sorry to all the oysters reading this).

Shrimp demonstrate behavioral differences, with some being more dominant and others being more docile. This provides more evidence against shrimp being merely complicated machines. Individual shrimp also have differing levels of risk aversion. Mere mechanisms tend not to display personality differences. Decapods (this includes shrimp, lobsters, and crabs) also nurse their wounds the same way that humans do.

Crabs display quite complex behavior regarding shells. One study by Robert Elwood found that hermit crabs make complex and impressive tradeoffs. Specifically they:

Integrate together information from multiple senses to come to an overall picture about the desirability of shells.

Form memories about shells.

Make complicated tradeoffs about the value of various shells.

When they get shocked in a shell, they trade off the value of the shell against the pain of the shock.

“Crabs also fight to get shells from other crabs, and again they gather information about the shell qualities and the opponent. Attacking crabs monitor their fight performance, and defenders are influenced by attacker activities, and both crabs are influenced by the gain or loss that might be made by swapping shells.”

“Hermit crabs show remarkable abilities, involving future planning, with respect to recognizing the shape and size of shells, and how they limit their passage through environmental obstructions.”

Hermit crabs assess whether shells will be available in the future, thus engaging in future planning.

“Groups of crabs arrange themselves in size order so that orderly transfer of shells might occur down a line of crabs.”

Decapods also exhibit a stress response. Shrimp and crayfish both seem to exhibit a stress response that produces chemical changes and risk aversion, and crayfish respond to anti-anxiety drugs given to humans. On the supposition that decapods don’t suffer, it’s utterly mysterious that they respond in every way—from reacting to painkillers to getting frightened to making tradeoffs—as if they were conscious.

One study even found that shrimp become exhausted, so that if poked once, they flick their tail, but if poked many times, eventually they stop flicking their tail. They even seem to prioritize tending to their wounds over eating, delaying feeding for around 30 minutes when in pain. Most shockingly, crayfish self-administer amphetamine, which is utterly puzzling on the assumption that they aren’t conscious. Hard to imagine that a non-conscious creature would have a major preference for drugs.

Not sure how much this counts, but around 95% of farmers in India, who deal with shrimp regularly, think they’re conscious.

Most convincing is that nearly every investigation has turned up evidence for decapod pain. While it would be one thing if there were many studies pointing in both directions, the general trend is that studies go the way that one who believed in decapod consciousness would expect, and their critics are left explaining them away. So what do those who deny crustacean consciousness say?

It’s often suggested that decapods like shrimp and lobsters don’t have brains. This isn’t true. Even penaeidae (the shrimp we generally eat) have brains, though they’re relatively primitive. You might be surprised that penaeidae shrimp brains give rise to consciousness, but it’s similarly surprising that human brains give rise to consciousness! If you look in a brain, you’ll never find qualia!

Others note that crayfish, for instance, only respond to certain types of painful stimuli. They don’t react aversively to chemical stimuli or low temperature stimuli. But this tells us little, as some mammals like the African mole rat have nociceptors that don’t respond to certain kinds of stimuli. It’s highly likely that decapods have nociceptors given that they’re ubiquitous across the animal kingdom, including in insects, and there’s behavioral evidence for nociception (a new study might have found them).

The standard arguments against invertebrate pain are weak. It’s just repeated that they have simple brains without very many neurons. But as described before, we don’t have good reason to think that simple brains mean dull consciousness or that neuron counts correlate with intensity of valenced consciousness. What matters more than number of neurons is the sorts of functions they perform, yet crustaceans’ nervous systems perform similar functions to ours.

Finally, one might think that only some decapods are conscious. Because penaeidae have simple brains, perhaps they can’t suffer but, say, crayfish can. This judgment could be supported by the limited research on shrimp. Here, however, four things are of note.

First, given the similarity between the various decapods, probably either they’re all conscious or none of them are. It would be surprising if only some decapods turned out to be conscious. This also best explains the consistent pattern across decapods of possession of features that correlate with consciousness.

Second, as discussed before, simple brains don’t necessarily mean simple consciousness.

Third, much of the evidence discussed has regarded shrimp. When hurt, shrimp show long-lasting unusual behavior and groom the affected area, yet this is reduced by anesthetic, and they have protocerebral centers linked to information integration, learning, and memory. They also display individual personality differences and taste preferences.

Fourth, my core claim in this article is that the evidence supports the ubiquitous pain thesis. If a creature thrashes and tries to get away when you hurt it, there should be a strong presumption in favor of thinking it feels a good bit of pain. This thesis is supported by suffering in fish and widespread suffering in decapods, and then if it’s right, it lends support to the consciousness of any particular creature.

Section summary: crustaceans (decapods more specifically) probably feel pain given that they:

Make tradeoffs between pain and gain.

Nurse their wounds.

Respond to anesthetic.

Self-administer drugs.

Prioritize pain response over other behavior.

Have physiological responses to pain like increased heart rate.

React to pain, trying to get away.

Remember crabs they fought for up to four days.

Remember and avoid areas where they suffered pain.

Integrate together lots of different kinds of information into one broad picture, the way consciousness does.

Engage in future planning.

Display stress and anxiety.

Have individual personality differences.

Respond to anti-anxiety medication.

Seem to become exhausted.

5 Insects

I was doubtful of insect pain for a long time. My skepticism came mostly from hearing the sorts of claims initially made by an influential paper by Eisemann et al. Barrett summarizes these claims:

1. Insects lack nociceptors.

2. Endogenous opioid peptides and their receptor sites do not necessarily indicate the capacity for pain perception.

3. Because insects have simple brains with relatively few neurons compared to mammals, they cannot generate the experience of pain.

4. Insects do not protect injured body parts.

5. Insects often behave normally after severe injuries.

6. Insect behavior is inflexible in a way that suggests that pain would have little adaptive value for them.

The problem is that all of these claims have been undermined by more recent research as Barrett and Fischer argue in an important paper. Nociceptors have been found in many different species of insects. Given the similarity of function across insects, probably many different insects have nociceptors. As for their second claim, while insects may lack endogenous opioid peptides that modulate pain in humans, they may represent pain in other ways.

As for the third claim about brain size, some insects have roughly similar brain size and neuron counts to some very small mammals, and we haven’t even studied the brains of the biggest insects. This becomes especially true when we consider the giant insects in the fossil record. Additionally, as discussed before, and furthered here, brain size is a poor measure of cognitive complexity.

As for the fourth claim, insects often show a dramatically decreased willingness to eat and mate (sometimes, in their case, at the same time) after receiving an injury. Insects increase grooming of an injured area, yet this effect vanishes if they are given morphine, a painkiller. It comes back if they are then given naloxone, which cancels out the morphine. After getting a leg injury, insects, in fact, place less stress on the injured leg and often limp and move more steadily.

Barrett and Fischer also provide an explanation of where other studies might go wrong: often there’s a delay between the insects being harmed and the tests on them. Insofar as insects, like perhaps fish, feel momentary shooting pain but not chronic pain, perhaps by that time, the injuries are no longer painful.

It’s true that sometimes insects sustain significant injury and keep going about their business as if nothing is wrong. However:

Even in vertebrates, response to injury is hugely context dependent. We only have major behavioral responses to some kinds of injury, and often the behavioral change is unpredictable.

The reason it’s beneficial for us to feel pain from injured body parts is largely that it makes us put less stress on that area. Yet such factors play a much smaller role regarding insect body parts given their physiology and size.

There are examples in humans and other mammals of responding normally to injury—such a thing sometimes occurs with soldiers injured in combat or prey animals that walk on injured limbs. When Mike Tyson is being viciously hit in the head, he acts like he isn’t in pain.

Eisemann’s claim that injuries often lead to animals continuing to walk normally came from visual observation. He didn’t do a study, he was just writing about things he’d seen from some insects over the years. Yet humans are not good at visually observing and intuiting the behavior of creatures as different from us as insects. Anecdotes tell us nothing of the prevalence of these behaviors. While Eisemann claims that insects don’t respond negatively to, for example, cannibalism, in fact they often fight back quite furiously.

Insects respond quite broadly to noxious stimuli—for instance, shock and high heat. While there might be rare cases where insects don’t seem to react aversively to injury, almost all the time, they do. Barrett and Fischer finish this section:

To summarize: the central plank of Eisemann’s case against insect pain is based on unsystematic observations, without due consideration of prevalence, mechanism, context, or biomechanics, of a limited class of negative stimuli that lead to behaviors that are ripe for misinterpretation. However, when we recall key differences in morphology and biomechanics between insects and other animals, these behaviors may be less surprising in some cases. Additionally, more systematic observations of insects reveal more familiar responses to mechanical damage and demonstrate context-dependent repair. Finally, a broader view of the relevant noxious stimuli—notably, shock and heat—demonstrate that insects do not behave “normally” in response to injury. Though there is more to say here, these points suffice to show that the behavioral evidence against insect pain is not nearly as robust as Eisemann suggests.

Regarding Eisemann’s final claim that insect behavior is inflexible and mechanical, this has been overturned by more recent research. Insects make tradeoffs between injury and reward and are capable of learning.

As for the positive evidence that insects feel pain, Gibbons et al. released a detailed 75-page report on insect sentience. Across 8 criteria for consciousness, insects met either 6 or 3-4 of the criteria, depending on the insect order. Of the criteria they did not meet, however, it was never because there was contrary evidence, but always because not enough research had been done. No research ever suggested an insect failed a criterion. Building on previous work, the authors suggested the 8 criteria most relevant to assessing feeling pain are:

1. Nociceptors: The animal possesses receptors sensitive to noxious stimuli (nociceptors).

2. Integrative brain regions: The animal possesses integrative brain regions capable of integrating information from different sensory sources.

3. Integrated nociception: The animal possesses neural pathways connecting the nociceptors to the integrative brain regions.

4. Analgesia: The animal’s behavioural response to a noxious stimulus is modulated by chemical compounds affecting the nervous system in either or both of the following ways:

a. Endogenous: The animal possesses an endogenous neurotransmitter system that modulates (in a way consistent with the experience of pain, distress or harm) their responses to threatened or actual noxious stimuli

b. Exogenous: Putative local anaesthetics, analgesics (such as opioids), anxiolytics or anti-depressants modify an animal’s responses to threatened or actually noxious stimuli in a way consistent with the hypothesis that these compounds attenuate the experience of pain, distress or harm.

5. Motivational trade-offs: The animal shows motivational trade-offs, in which the disvalue of a noxious or threatening stimulus is weighed (traded-off) against the value of an opportunity for reward, leading to flexible decision-making. Enough flexibility must be shown to indicate centralised, integrative processing of information involving an evaluative common currency.

6. Flexible self-protection: The animal shows flexible self-protective behaviour (e.g., wound tending, guarding, grooming, rubbing) of a type likely to involve representing the bodily location of a noxious stimulus

7. Associative learning: The animal shows associative learning in which noxious stimuli become associated with neutral stimuli, and/or in which novel ways of avoiding noxious stimuli are learned through reinforcement.

8. Analgesia preference: The animal shows that they value a putative analgesic or anaesthetic when injured in one or more of the following ways:

a. Self-administration: The animal learns to self-administer putative analgesics or anaesthetics when injured.

b. Conditioned place preference: The animal learns to prefer, when injured, a location at which analgesics or anaesthetics can be accessed.

c. Prioritisation: The animal prioritises obtaining these compounds over other needs (such as food) when injured.

Regarding the first, as discussed before, many insects have nociceptors. Even in cases where we haven’t yet detected nociceptors, this is mostly because the subject is underexplored. As for the second, insect brains integrate together many kinds of information, and nearly all adult insects have “mushroom bodies” (neither mushroom nor body—who named these things!!??) which give them the ability to learn, remember, and process sensory information. Regarding the third, studies on cockroaches have shown that their brains integrate the nociceptive information. While people often remark on the fact that insects can survive for a bit without a head, cockroaches no longer have coordinated responses to harmful stimuli after missing their head, showing that the head integrates pain signals. (Also, chickens can survive for a bit without a head, but that doesn’t mean they’re not conscious).

The fourth provides the most evidence: insects respond to noxious stimuli by trying to get away. However, this effect is blunted by a painkiller, which makes insects more willing to suffer harm. If given a painkiller, they’re more likely to endure heat for the sake of a reward. Bees avoid areas where they’re given electric shocks, yet this effect is reduced if they are given a painkiller.

Fruitflies also seem to get depressed. If they’ve been treated badly, when placed in water, they lie dormant for a bit, not moving. This is very similar to how depressed humans often lie in bed and remain inactive. Legend has it that when lying in the water, fruitflies like to eat ice cream and binge their favorite shows. However, giving them anti-depressants causes them to start moving more quickly. They also display states akin to anxiety; after being subjected to danger, they flee to dark areas and arena edges.

Regarding the fifth, insects make complicated tradeoffs between pain and gain (I know I have already used that phrase twice, but it’s really a great phrase—it rhymes). When deciding whether to jump across heat to access food that they enjoy, they make tradeoffs between the degree of the heat and the light. Bumblebees make complex tradeoffs between sugar water and pain, such that which place they choose to feed is a function both of the sugariness of the water and the heat they need to endure to get to it. Note that in this case they remember the tastiness of the water and the degree of the heat, which they learn to identify from the color of the feeder, and then use this to make future decisions.

Regarding the sixth, insects engage in flexible self-protection, the way many other creatures do. Many insects nurse their own wounds and, in the case of ants, the wounds of other injured ants.

Regarding the seventh, insects display associative learning. If an adult fruit fly is shocked after being exposed to a smell, it later consistently avoids that smell. Similar things are true across many different kinds of insects, including flies who, after being exposed to just one painful stimulus, remember what was happening. Gibbons et al. conclude:

Overall, there are multiple studies demonstrating nociceptive associative learning in adult Blattodea, Diptera, Hymenoptera, Lepidoptera, and Orthoptera, as well as last instar juvenile Diptera and Lepidoptera, so we have very high confidence that these orders fulfil this criterion at these life stages.

Rethink Priorities commissioned a very detailed report on the probability and degree of insect sentience, analyzing both bees and black soldier flies (the primary ones that are being farmed and fed to farmed fish). They found a large amount of evidence for insect sentience. One study they cite involved making bees engage in complex tasks that required backtracking to get reward:

We therefore trained bumblebees with two types of task that we believe represented challenges unlike any they have evolved to respond to. These involved dragging various-sized caps aside and rotating discs through varying arcs away from the entrances of artificial flowers (via detours of up to three body lengths), to access a reward hidden beneath. Further, we successfully trained bees to use disc colour as a discriminative stimulus predicting whether rotating discs clockwise or counterclockwise would reveal the reward. This true, complex operant conditioning demonstrated that bumblebees can learn novel, arbitrary behavioural sequences, manipulating and moving items in ways that seem far from any natural task that they would encounter, and doing so flexibly in response to specific discriminative stimuli. This adds to growing evidence of impressive behavioural plasticity and learning abilities in bees, and suggests new approaches for probing their cognitive abilities in the future.

Not only can they rotate disks in complex formations that they didn’t evolve to do, honey bees communicate, including through a complex waggle dance that takes into account the location of the sun. As Peter Curry (substack here) writes:

The waggle dance is a line dance performed by honey bees - an individual bee discovers a food source, and boy, is she excited (the vast majority of bees are female). She arrives back in the hive, and begins ferociously waggling while running in a line, then does a semicircular loop and starts the dance again. The angle that the bee runs in relation to the vertical is the angle that the food source is relative to the sun. If a bee runs straight upwards, the food source is in the direction of the sun. The distance the bee runs is proportional to the distance to the food source.

As you may have surmised at some point, the sun moves. The dancers factor this in, and as they spend longer performing the dance, they change the angle of their dance in order to factor in the movement of the sun. And if the sun is behind a cloud? Bees are sensitive to polarised light, and can infer the location of the sun from this light.

An important detail here is that it is generally dark inside hives. Other bees can’t actually see the dance. Instead, bees that dream of foraging put their feelers on the dancer's abdomen, and hold them there while the dancer shimmies. It’s probably very arousing if you’re a bee. Bees have specific dialects of their waggle dance language, and some bees can learn the dialects of other bee languages if they spend enough time on Duolingo and don’t get killed by the foreign bees.
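The geometry Curry describes can be put in a few lines of code. This is only a rough sketch of the rule as stated in the quote (run angle from vertical equals the food's angle from the sun, run length proportional to distance). The function name decode_waggle and the metres_per_unit scale are made up for illustration and are not taken from any bee study.

```python
def decode_waggle(run_angle_deg, sun_azimuth_deg, run_length, metres_per_unit=100.0):
    """Turn one waggle run into a compass bearing and a distance.

    run_angle_deg: angle of the run measured from straight up on the comb.
    sun_azimuth_deg: compass direction of the sun when the dance is read.
    run_length: length of the run, in arbitrary units.
    metres_per_unit: hypothetical scale factor, assumed for this sketch only.
    """
    # Straight up on the comb means "fly toward the sun", so the run angle
    # is simply added to the sun's current compass direction.
    bearing_deg = (sun_azimuth_deg + run_angle_deg) % 360.0
    # The quote says only that distance is proportional to run length.
    distance_m = run_length * metres_per_unit
    return bearing_deg, distance_m


bearing, distance = decode_waggle(run_angle_deg=20.0, sun_azimuth_deg=350.0, run_length=3.0)
print(bearing, distance)
```

Because the sun's azimuth is an input, the same food source needs a different run angle later in the day, which is the correction the dancers are described as making.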

(Sidenote: Peter should have won the ACX book review competition. He would have if it weren’t for MASSIVE AMOUNTS of MAIL IN BALLOTS. He just needs 11,000 votes, Brad, give me a break).

Curry also notes that bees have awareness of their bodies—when flying through an area they shift position depending on whether they need to approach it diagonally, sideways, or go around. Additionally, bees seem to dream in a way similar to mammals. He writes:

Bees need to sleep. If they don’t get their beauty sleep, their dance moves get worse. If you expose bees to odours while they are in a phase of deep sleep, they begin to consolidate memories from the previous day, and their memories improve. Rats do a similar thing, repeating activation patterns from the previous day in their hippocampus. Does this suggest these creatures are dreaming?

Most impressively, bees figure out tool use. If a lever manipulates a pulley, making it possible to get sugar when pulled, many bees learn to pull it. Yet the dumbass bees who didn’t learn to get the sugar initially are able to learn from watching the bees around them. In addition, bees integrate multiple senses together to form one cohesive picture of the world.

The Rethink Priorities report concluded that almost every consciousness proxy has been shown to be displayed in bees. For the ones that aren’t, with the exception of transitive inference, this is only because of an absence of evidence, rather than evidence showing that they don’t engage in some behavior. This includes:

Displaying individual personality.

Foregoing temporary benefit for greater long term reward.

Not acting on one’s impulses.

Exhibiting a pessimism bias (thinking, if they’ve been exposed to new positive and negative stimuli at an equal rate, probably the next stimulus will be harmful).

Skill at navigating.

Making tradeoffs between pain and gain.

Recognizing numbers (if bees were offered some reward when shown, say, 4 things, even of different types, they learned to get excited when seeing four things).

Problem solving.

Responding cautiously to novel experiences.

Quickly identifying when some reward conditioning has been reversed (for instance, if a creature is initially rewarded when a bell is rung and then they’re shocked when it’s rung, they quickly learn to dread the bell).

Learning from others.

Mentally representing where in space other creatures are.

Discounting rewards longer in the future.

Using tools to manipulate a ball.

Judging which of two things it regards as more likely to happen (bees opt out of difficult trials, in favor of easy ones, to try to get a reward).

Being anxious.

Learning from pain.

Fidgeting in response to stress.

Parental care.

Being afraid.

Being helpful.

Self medicating.

Having their response be modified by pain killers.

Comparatively assessing the relative value of different nectars, and other potential rewards.

Disliking particular tastes.

Now, bees are among the most impressive insects. But even black soldier flies, a generally unremarkable kind of insect (that we’re tormenting by the trillions) meet a lot of these. Specifically, black soldier flies have the following consciousness proxies:

Communicating.

Cooperating.

Integrating and learning across multiple senses.

Individual differences.

Pessimism.

Integrating lots of different sensory inputs into one broad picture.

Navigating.

Quickly identifying when some reward conditioning has been reversed (for instance, if a creature is initially rewarded when a bell is rung and then they’re shocked when it’s rung, they quickly learn to dread the bell).

Anxiety.

Associative learning from pain.

Depression (after being mistreated, they don’t move when put in water for a while, like depressed humans).

Pain responses that sometimes get worse without any more stimuli (like in humans).

Aversion to particular tastes.

People find it unintuitive that insects suffer intensely, but I think this is most likely the result of bias. Insects are very small—probably the reason people sympathize more with lobsters than bugs is that bugs are very small. We have very different intuitions about giant prehistoric insects like the one below than, say, houseflies, though they’re similar:

Additionally, as Brian Tomasik notes, caring about insects is inconvenient. It makes morality messy, where everything we do is constantly killing creatures that can feel (though I suspect this doesn’t count against it as most insects live quite wretched lives). Based on the fact that insects look weird—in the words of Kendrick Lamar, “they not like us,”—caring about them is inconvenient, and they’re very small, we’re predictably biased against them.

In sum, then, we have lots of evidence that insects are conscious: they display complex behaviors that provide evidence of consciousness, act as we’d expect them to act if in pain (making tradeoffs, responding to anesthetic, nursing wounds, remembering pain and avoiding painful areas in the future, displaying associative learning), and much more.

6 Concluding remarks

Here I have argued for what we might call the ubiquitous pain thesis: all the creatures of significantly debated consciousness are conscious. Probably every creature that has a brain and nociceptors is conscious, and such things are most likely ubiquitous. Additionally, I’ve argued there’s a reasonable probability these creatures suffer a lot. While some of the evidence is species specific, there are broad trends—nearly all the creatures investigated:

Respond to analgesic.

Nurse wounds.

Make tradeoffs between pain and reward.

Have physiological response to pain.

Learn to avoid places where they were in pain.

Display symptoms of anxiety.

Have individual personality differences.

Integrate many different bits of information.

Possess nociceptors.

I’ve provided 5 a priori arguments for the ubiquitous pain thesis, which, being simple and parsimonious, should start out pretty plausible: ubiquitous pain is evolutionarily beneficial, such a thing would explain the behavioral reactions in animals of trying to avoid harmful stimuli, the odds that we’d be conscious are higher if consciousness is more widespread than more limited, consciousness being widespread makes better sense under dualism (where consciousness is fundamental) and physicalism (where it probably just involves some type of information processing), and the inductive trend shows that humans tend to underestimate the frequency of animal suffering. In addition, I’ve reviewed experimental evidence from many creatures of debated consciousness—fish, insects, and crustaceans (decapods, to be precise)—and argued that these all support the ubiquitous pain thesis.

The thing that was most convincing—more than any specific experimental result—is the broad trend. As the data rolled in, it consistently provided more and more evidence for consciousness in the species of debated consciousness. There were many cases of creatures seeming to exhibit consciousness but no cases of any solid evidence against species exhibiting consciousness. One who started with the assumption that animals aren’t conscious would have consistently incorrectly predicted experimental results, while the opposite is true of those who started with the assumption that animals are conscious.

I’ve also argued that pain in simple creatures is likely pretty intense. If pain serves to teach creatures a lesson, then simpler creatures would need to feel more pain, and creatures with simpler cognition would have their entire consciousness occupied by pain. In addition, the behavioral evidence reviewed by Rethink Priorities seems to suggest that it’s likely that many animals experience a lot of pain—they react quite strongly to stimuli, not like a creature in a dull, barely-conscious haze. I’d bet at 66% odds that most insects, fish, and decapods are conscious and would say that conditional on them being conscious, there’s a 70% chance they feel relatively intense pain. If this is right, then it makes a strong case for taking insect and shrimp suffering quite seriously and giving to organizations working on reducing their suffering.
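For anyone who wants the two bets combined into one number, here is the arithmetic as a tiny sketch. The variable names are just labels chosen for illustration; the only inputs are the 66% and 70% figures above, multiplied together in the usual way for a conditional probability.

```python
# Credence that the swing state species are conscious (figure from the text).
p_conscious = 0.66

# Credence in relatively intense pain, given that they are conscious (figure from the text).
p_intense_given_conscious = 0.70

# P(conscious and intense pain) = P(conscious) * P(intense pain | conscious)
p_conscious_and_intense = p_conscious * p_intense_given_conscious

print(f"{p_conscious_and_intense:.0%}")  # prints 46%
```

So the combined bet comes out a little under even odds that these creatures are both conscious and in relatively intense pain.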

Sadly, it seems like all the swing state species are conscious.

This is one of my favorite things you've ever written. Incredibly informative.

Re the relationship between neuron count and intensity: You raise good arguments, yet I'd still be surprised if people who study this stuff concluded a single neuron could feel pain. It seems like there is a relationship between neuron count and pain (as well as sentience more broadly), just not a linear one, and not one we understand well.

I will answer to this in some detail, while of course for me more than 3 pages is malpractice:-)

Let suffice now to say that if “pain” is penalty in the utility function of the neural network, you are obviously right.

A different thing is if there is a “self” to suffer that penalty. I can program a neural network with extreme aversion to this or that, but the consciousness of the structure depends on the network to be complex enough to have a self able to suffer.

No amount of behavioral evidence would convince you that a Boston dynamics dog suffers, and the reason is that you need more than behavior. As commented in the Shrimp post, a broad extension of the moral circle needs a broad theory of consciousness. If you are a naturalistic dualist, and think that consciousness is epiphenomenic, the difficulty is big and metaphysical.